Speeding Up Your Website’s Database

However, the focus of the discussion is generally on minimizing file sizes, improving server settings and optimizing CSS and Javascript.

The discussion glosses over another important factor: the speed with which your pages are actually put together on your server. Most big modern websites store their information in a database and use a language such as PHP or ASP to extract it, turn it into HTML and send it to the Web browser.

Further Reading on SmashingMag:

- Advanced WordPress Management With WP-CLI

- Why Static Website Generators Are The Next Big Thing

- WordPress Performance Improvements That Can Go Wrong

- Speed Up Your Mobile Website With Varnish

So, even if you get your home page down to 1.5 seconds (Google’s threshold for being considered a “fast” website), you can still frustrate customers if your search page takes too much time to respond, or if the product pages load quickly but the “Customer reviews” delay for several seconds.

Google’s threshold for a fast-loading website is about 1.5 seconds. This screenshot comes from Google Webmaster Tools (go to [domain name] → Diagnostics → Site Performance).

This article looks at these sorts of issues and describes some simple ways to speed up your website by optimizing your database. It starts with common knowledge but includes more complex techniques at the end, with links to further reading throughout. The article is intended for fearless database beginners and designers who have been thrown in at the deep end.

What Is A Database? What Is SQL?

A database is basically a collection of tables of information, such as a list of customers and their orders. It could be a filing cabinet, a bunch of spreadsheets, a Microsoft Access file or Amazon’s 40 terabytes of book and customer data.

A typical database for a blog has tables for users, categories, posts and comments. WordPress includes these and a few other starter tables. A typical database for an e-commerce website has tables for customers, products, categories, orders and order items (for the contents of shopping baskets). The open-source e-commerce software Magento includes these and many others. Databases have many other uses — such as for content management, customer relations, accounts and invoicing, and events — but these two common types (i.e. for a blog and an e-commerce website) will be referenced throughout this article.

Some tables in a database are connected to other tables. For example, a blog post can have many comments, and a customer can make multiple orders (these are one-to-many relationships). The most complicated type of database relationship is a many-to-many relationship. One relationship is at the core of all e-commerce databases: an order can contain many products, and a single product can be added to many different orders. This is where the “order items” table comes in: it sits between the products and the orders, and it records every time a product is added to an order. This will be relevant later on in the article, when we look at why some database queries are slow.

The word database also refers to the software that contains all this data, as in “My database crashed while I was having breakfast,” or “I really need to upgrade my database.” Popular database software include Microsoft Access 2010, Microsoft SQL Server, MySQL, PostgreSQL and Oracle Database 11g.

The acronym SQL comes up a lot when dealing with databases. It stands for “structured query language” and is pronounced “sequel” or “es-cue-el.” It’s the language used to ask and tell a database things — exciting things like SELECT lastname FROM customers WHERE city=‘Brighton’. This is called a database query because it queries the database for data. There are other types of database statements: INSERT for putting in new data, UPDATE for updating existing data, DELETE for deleting things, CREATE TABLE for creating tables, ALTER TABLE and many more.

How Can A Database Slow Down A Website?

A brand new empty website will run very fast, but as it grows and ages, you may notice some sluggishness on certain pages, particularly pages with complicated bits of functionality. Suppose you wanted to show “Customers who bought this product also bought…” at the bottom of a page of products. To extract this information from the database, you would need to do the following:

- Start with the current product,

- See how many times the product has recently been added to anyone’s shopping basket (the “order items” table from above),

- Look at the orders related to those shopping baskets (for completed orders only),

- Find the customers who made those orders,

- Look at other orders made by those customers,

- Look at the contents of those orders’ baskets (the “order items” again),

- Look up the details of those products,

- Identify the products that appear the most often and display them.

You could, in fact, do all of that in one massive database query, or you could split it up over several different queries. Either way, it might run very quickly when your database has 20 products, 12 customers, 18 orders and 67 order items (i.e. items in shopping baskets). But if it is not written and programmed efficiently, then it will be a lot slower with 500 products, 10,000 customers, 14,000 orders and 100,000 order items, and it will slow down the page.

This is a very complicated example, but it shows what kind of stuff goes on behind the scenes and why a seemingly innocuous bit of functionality can grind a website to a halt.

A website could slow down for many other reasons: the server running low on memory or disc space; another website on the same server consuming resources; the server sending out a lot of emails or churning away at some other task; a software, hardware or network fault; a misconfiguration. Or it may have suddenly become a popular website. The next two sections, therefore, will look at speed in more detail.

Is It My Database?

There are now several ways to analyze your website’s speed, including the Firebug plug-in for Firefox, the developer tools in Google Chrome (press Shift + Control + I, and then go to Resources → Enable Resource Tracking) and Yahoo YSlow. There are also websites such as WebPagetest, where you can enter a URL, and it will time it from your chosen location.

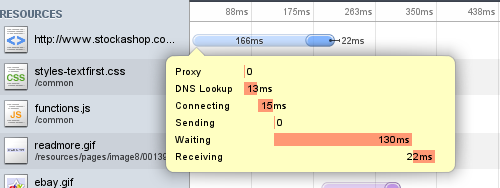

All of these tools will show you a diagram of all of the different resources (HTML, images, CSS and JavaScript files) used by your page, along with how long each took to load. They will also break down the time taken to perform a DNS lookup (i.e. to convert your domain name into an IP address), the time taken to connect to your server, the time spent waiting for your server to reply (aka “time to first byte”), and the time spent receiving (i.e. downloading) the data.

Many Web pages are constructed in their entirety by the Web server, including by PHP that accesses the database, and then sent to the browser all at once, so any database delays would lead to a long waiting time, and the receiving/downloading time would be proportional to the amount of data sent. So, if your 20 kB HTML page has a quick connection, a waiting time of 5 seconds and a download time of 0.05 seconds, then the delay would occur on the server, as the page is being built.

Not all Web pages are like this, though. The PHP flush function forces the server to send the HTML that it has already built to the browser right away. Any further delays would then be in the receiving time, rather than the waiting time.

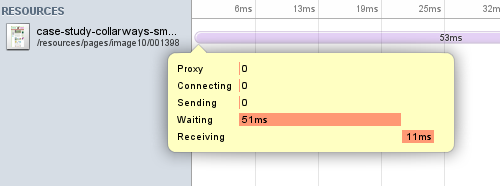

Either way, you can compare the waiting/receiving time for your suspected slow and complicated Web page to the waiting time for a similarly sized HTML page (or image or other static resource) on the same server at the same time. This would rule out the possibility of a slow Internet connection or an overloaded server (both of which would cause delays) and allow you to compare the times taken to construct the pages. This is not an exact science, but it should give you some indication of where things are being held up.

The screenshots below show the analysis provide by Google Chrome’s Developer Tools of a 20 kB Web page versus a 20 kB image. The Web page waited 130 milliseconds (ms) and downloaded for 22 ms. The image waited for 51 ms and downloaded for 11 ms. The download/receiving times are about the same, as expected, but the server is spending about 80 ms extra on processing and constructing the Web page, which entails executing the PHP and calling the database.

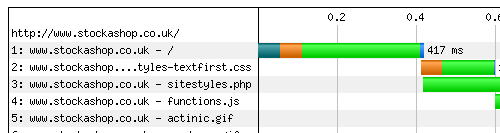

When performing these tests, analyze the static resource by itself and click “Refresh,” so that you are not getting a quick cached version. Also, run each a few times to ensure that you’re not looking at a statistical anomaly. The third screenshot below shows that WebPagetest indicates almost double the time of Google for the same page at the same time, demonstrating that using the same environment for all tests is important.

Resource analysis using Google Chrome’s Developer Tools, showing a 130-ms wait time for a Web page.

The same tool, showing a 51-ms wait time for an image of about the same size.

Resource analysis of the same page from WebPagetest, with a 296-ms wait time and a 417-ms total time.

How To Time A Database Query In PHP And MySQL

The approach above was general; we can now get very specific. If you suspect that your database might be slowing down your website, then you need to figure out where the delay is coming from. I will define a couple of timing functions, and then use them to time every single database query that is run by a page. The code below is specific to PHP and MySQL, but the method could be used on any database-driven website:

function StartTimer ($what=’) {

global $MYTIMER; $MYTIMER=0; //global variable to store time

//if ($_SERVER['REMOTE_ADDR'] != '127.0.0.1') return; //only show for my IP address

echo '<p style="border:1px solid black; color: black; background: yellow;">';

echo "About to run <i>$what</i>. "; flush(); //output this to the browser

//$MYTIMER = microtime (true); //in PHP5 you need only this line to get the time

list ($usec, $sec) = explode (' ', microtime());

$MYTIMER = ((float) $usec + (float) $sec); //set the timer

}

function StopTimer() {

global $MYTIMER; if (!$MYTIMER) return; //no timer has been started

list ($usec, $sec) = explode (' ', microtime()); //get the current time

$MYTIMER = ((float) $usec + (float) $sec) - $MYTIMER; //the time taken in milliseconds

echo 'Took ' . number_format ($MYTIMER, 4) . ' seconds.</p>'; flush();

}StartTimer starts the timer and also prints whatever you are trying to time. The second line is a check of your IP address. This is very useful if you are doing this (temporarily) on a live website and don’t want everyone in the world to see the timing messages. Uncomment the line by removing the initial //, and replace the 127.0.0.1 with your IP address. StopTimer stops the timer and displays the time taken.

Most modern websites (especially well-programmed open-source ones) have a lot of PHP files but query the database in only a handful of places. Search through all of the PHP files for your website for mysql_db_query or mysql_query. Many software development packages such as BBEdit have functions to perform searches like this; or, if you are familiar with the Linux command line, try this:

grep mysql_query find . -name *php

You may find something like this:

mysql_query ($sql);For WordPress 3.0.4, this is on line 1112 of the file wp-includes/wp-db.php. You can copy and paste the functions above into the top of this file (or into any PHP file that is included by every page), and then add the timer before and after the mysql_query line. It will look like this:

StartTimer ($query);

$this->result = @mysql_query( $query, $dbh );

StopTimer();Below is a partial screenshot of this being done on a brand new WordPress installation. It is running about 15 database queries in total, each taking about 0.0003 seconds (0.3 ms); so, less than 5 ms in total, which is to be expected for an empty database.

This shows and times all of the database queries that WordPress runs.

If you have found this line in other commonly used systems, please share this information by adding to the comments for this article.

You can also do other interesting things with it: you can see how fast your computer is compared to mine. Counting to 10 million takes my computer 2.9420 seconds. My Web server is a bit faster at 2.0726 seconds:

StartTimer ('counting to 10000000');

for ($i=0; $i<10000000; $i++); //count to a high number

StopTimer();Notes on the Results

This technique gives you only comparative results. If your server was very busy at that moment, then all of the queries would be slower than normal. But you should have at least been able to determine how long a fast query takes on your server (maybe 1 to 5 ms), and therefore identify the slow-ish ones (200+ ms) and the really slow ones (1+ second). You can run the test a few times over the course of an hour or day (but not immediately after — see the section below about the database cache) to make sure you’re not getting a fluke.

This will also most likely severely mess up the graphical presentation of the page. It may also give you PHP warnings like “Cannot modify header information. Headers already sent by…” This is because the timing messages are interfering with cookie and session headers. As long as the page still displays below the warnings, you can ignore them. If the page does not display at all, then you may need to put the StartTimer and StopTimer around specific blocks of code, rather than around mysql_query.

This technique is essentially a quick hack to show some rough results. It should not be left on a live website.

What Else Could It Be?

If your database queries are not particularly slow, but the construction of your Web page is, then you might just have poorly written code. You can put the timer statements above around bigger and bigger blocks of code to see if and where the delay is occurring. It could be that you are looping through 10,000 full rows of product information, even if you are displaying only 20 product names.

Profiling

If you are still baffled and/or want more complete and accurate information about what’s happening in your code, you could try a debugging and profiling tool such as Xdebug, which analyzes a local copy of your website. It can even visually show where bottlenecks are occurring.

Indexing Database Tables

The experiment above may have surprised you by showing just how many database queries a page on your website is running, and hopefully, it has helped you identify particularly slow queries.

Let’s look now at some simple improvements to speed things up. To do this, you’ll need a way to run database queries on your database. Many server administration packages (like cPanel or Plesk) provide phpMyAdmin for this task. Alternatively, you could upload something like phpMiniAdmin to your website; this single PHP file enables you to look at your database and run queries. You’ll need to enter your database name, user name and password. If you don’t know these, you can usually find them in your website’s configuration file, if it has one (in WordPress, it’s wp-config.php).

Among the database queries that your page runs, you probably saw a few WHERE conditions. This is SQL’s way of filtering out results. For instance, if you are looking at an “Account history” type of page on your website, there is probably a query like this to look up all of the orders someone has placed. Something like this:

SELECT * FROM orders WHERE customerid = 2;This retrieves all orders placed by the customer with the database ID 2. On my computer, with 100,000 orders in the database, running this took 0.2158 seconds.

Columns like customerid — which deal with a lot of WHERE conditions with = or < or > and have many possible values, should be indexed. This is like the index at the back of a book: it helps the database quickly retrieve indexed data. This is one of the quickest ways to speed up database queries.

What to Index

In order to know which columns to index, you need to understand a bit about how your database is being used. For example, if your website is often used to look up categories by name or events by date, then these columns should be indexed.

SELECT * FROM categories WHERE name = 'Books';

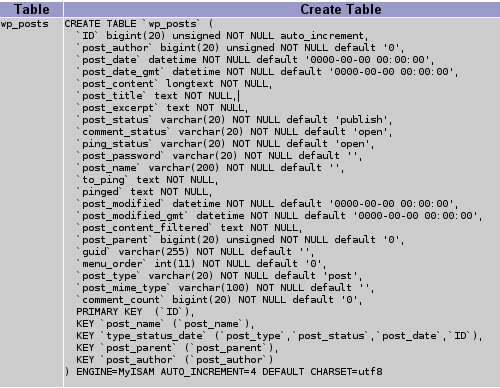

SELECT * FROM events WHERE startdate >= '2011-02-07';Each of your database tables should already have an ID column (often called id, but sometimes ID or articleid or the like) that is listed as a PRIMARY KEY, as in the wp_posts screenshot below. These PRIMARY KEYs are automatically indexed. But you should also index any columns that refer to ID numbers in other tables, such as customerid in the example above. These are sometimes referred to as FOREIGN KEYs.

SELECT * FROM orders WHERE customerid = 2;

SELECT * FROM orderitems WHERE orderid = 231;If a lot of text searches are being done, perhaps for descriptions of products or article content, then you can add another type of index called a FULL TEXT index. Queries using a FULL TEXT index can be done over multiple columns and are initially configured to work only with words of four or more letters. They also exclude certain common words like about and words that appear in more than 50% of the rows being searched. However, to use this type of index, you will need to change your SQL queries. Here is a typical text search, the first without and the second with a FULL TEXT index:

SELECT * FROM products WHERE name LIKE '%shoe%' OR description LIKE '%shoe%';

SELECT * FROM products WHERE MATCH(name,description) AGAINST ('shoe');It may seem that you should go ahead and index everything. However, while indexing speeds up SELECTs, it slows down INSERTs, UPDATEs and DELETEs. So, if you have a products table that hardly ever changes, you can be more liberal with your indexing. But your orders and order items tables are probably being modified constantly, so you should be more sparing with them.

There are also cases where indexing may not help; for example, if most of the entries in a column have the same value. If you have a stock_status column that stores a value of 1 for “in stock,” and 95% of your products are in stock, then an index wouldn’t help someone search for in-stock products. Imagine if the word the was indexed at the back of a reference book: the index would list almost every page in the book.

SELECT * FROM products WHERE stock_status = 1;How to Index

Using phpMyAdmin or phpMiniAdmin, you can look at the structure of each database table and see whether the relevant columns are already indexed. In phpMyAdmin, click the name of the table and browse to the bottom where it lists “Indexes.” In phpMiniAdmin, click “Show tables” at the top, and then “sct” for the table in question; this will show the database query needed to recreate the table, which will include any indices at the bottom — something like KEY ‘orderidindex’ (‘orderid’).

Using phpMiniAdmin to check for indices in the WordPress wp_posts table.

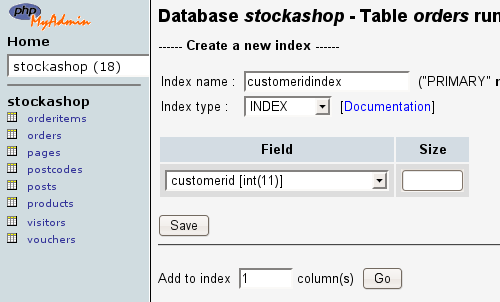

If the index does not exist, then you can add it. In phpMyAdmin, below the index, it says “Create an index on 1 columns”; click “Go” here, enter a useful name for the index (like customeridindex), choose the column on the next page, and press “Save,” as seen in this screenshot:

Indexing a column using phpMyAdmin.

In phpMiniAdmin, you’ll have to run the following database statement directly in the large SQL query box at the top:

ALTER TABLE orders ADD INDEX customeridindex (customerid);Running the query again after indexing takes only 0.0019 seconds on my computer, 113 times faster.

Adding a FULL TEXT index is a similar process. When you run searches against this index, you must list the same columns:

ALTER TABLE articles ADD FULLTEXT(title,author,articletext);

SELECT * FROM articles WHERE MATCH(title,author,articletext) AGAINST ('mysql');Back-Ups and Security

Before altering your database tables in any way, make a back-up of the whole database. You can do this using phpMyAdmin or phpMiniAdmin by clicking “Export.” Especially if your database contains customer information, keep the back-ups in a safe place. You can also use the command mysqldump to back up a database via SSH:

mysqldump --user=myuser --password=mypassword

--single-transaction --add-drop-table mydatabase

> backup`date +%Y%e%d`.sqlThese scripts also represent a security risk, because they make it much easier for someone to steal all of your data. While phpMyAdmin is often provided securely though your server management software, phpMiniAdmin is a single file that is very easy to upload and forget about. So, you may want to password-protect it or remove it after usage.

Optimizing Tables

MySQL and other kinds of database software have built-in tools for optimizing their data. If your tables get modified a lot, then you can run the tools regularly to make the database tables smaller and more efficient. But they take some time to run (from a few seconds to a few minutes or more, depending on the size of the tables), and they can block other queries from running on the table during optimization, so doing this at a non-busy time is best. There’s also some debate about how often to optimize, with opinions ranging from never to once in a while to weekly.

To optimize a table, run database statements such as the following in phpMyAdmin or phpMiniAdmin:

OPTIMIZE TABLE orders;For example, before I optimized my orders table with 100,000 orders, it was 31.2 MB in size and took 0.2676 seconds to run SELECT * FROM orders. After its first ever optimization, it shrunk to 30.8 MB and took only 0.0595 seconds.

The PHP function below will optimize all of the tables in your database:

function OptimizeAllTables() {

$tables = mysql_query ('SHOW TABLES'); //get all the tables

while ($table = mysql_fetch_array ($tables))

mysql_query ('OPTIMIZE TABLE ' . $table[0]); //optimize them

}Before calling this function, you have to connect to your database. Most modern websites will connect for you, so you don’t need to worry about it, but the relevant MySQL calls are shown here for the sake of completeness:

mysql_connect (DB_HOST, DB_USER, DB_PASSWORD);

mysql_select_db (DB_NAME);

OptimizeAllTables();Making Sure To Use The Cache

Just as a Web browser caches copies of pages you visit, database software caches popular queries. As above, the query below took 0.0019 seconds when I ran it the first time with an index:

SELECT * FROM orders WHERE customerid=2;Running the same query again right away takes only 0.0004 seconds. This is because MySQL has remembered the results and can return them a second time without looking them up again.

However, many news websites and blogs might have queries like the following to ensure that articles are displayed only after their published date:

SELECT * FROM posts WHERE publisheddate <= CURDATE();

SELECT * FROM articles WHERE publisheddate <= NOW();These queries cannot be cached because they depend on the current time or date. In a table with 100,000 rows, a query like the one above would take about 0.38 seconds every time I run it against an unindexed column on my computer.

If these queries are run on every page of your website, thousands of times per minute, it would speed things up considerably if they were cacheable. You can force queries to use the cache by replacing NOW or CURDATE with an actual time, like so:

SELECT * FROM articles WHERE publisheddate <= '2011-01-17 17:00';You can use PHP to make sure the time changes every five minutes or so:

$time = time();

$currenttime = date ('Y-m-d H:i', $time - ($time % 300));

mysql_query (“SELECT * FROM articles WHERE publisheddate <= '$currenttime'”);The percentage sign is the modulus operator. % 300 rounds the time down to the last 300 seconds or 5 minutes.

There are other uncacheable MySQL functions, too, like RAND.

Outgrowing Your Cache

Outgrowing your MySQL cache can also make your website appear to slow down. The more posts, pages, categories, products, articles and so on that you have on your website, the more related queries there will be. Take a look at this example:

SELECT * FROM articles WHERE publisheddate <= '2011-01-17 17:00' AND categoryid=12It could be that when your website had 500 categories, queries like this one all fit in the cache together and all returned in milliseconds. But with 1000 regularly visited categories, they keep knocking each other out of the cache and returning much slower. In this case, increasing the size of the cache might help. But giving more server RAM to your cache means spending less on other tasks, so consider this carefully. Plenty of advice is available about turning on and improving the efficiency of your cache by setting server variables.

When Caching Doesn’t Help

A cache is invalidated whenever a table changes. When a row is inserted, updated or deleted, all queries relying on that table are effectively cleared from the cache. So, if your articles table is updated every time someone views an article (perhaps to count the number of views), then the improvement suggested above might not help much.

In such cases, you may want to investigate an application-level cacher, such as Memcached, or read the next section for ideas on making your own ad-hoc cache. Both require much bigger programming changes than discussed up to now.

Making Your Own Cache

If a particularly viscous database query takes ages but the results don’t change often, you can cache the results yourself.

Let’s say you want to show the 20 most popular articles on your website in the last week, using an advanced formula that takes into account searches, views, saves and “Send to a friend” hits. And you want to show these on your home page in an unordered (<ul>) HTML list.

It might be easiest to use PHP to run the database query once an hour or once a day and save the full list to a file somewhere, which you can then include on your home page.

Once you have written the PHP to create the include file, you could take one of a couple approaches to scheduling it. You could use your server’s scheduler (in Plesk 8, go to Server → Scheduled Tasks) to call a PHP page every hour, with a command like this:

wget -O /dev/null -q https://www.mywebsite.co.uk/runhourly.phpAlternatively, you could get PHP to check whether the file is at least an hour old before running the query — something like this, where 3600 is the number of seconds in an hour:

$filestat = stat ('includes/complicatedfile.html');

//look up information about the file

if ($filestat['mtime'] < time()-3600) RecreateComplicatedIncludeFile();

//over 1 hour

readfile ('includes/complicatedfile.html');

//include the file into the pageReturning to the involved example above for “Customers who bought this product also bought…,” you could also cache items in a new database column (or table). Once a week or so, you could run that long set of queries for each and every product, to figure out which other products customers are buying. You could then store the resulting product ID numbers in a new database column as a comma-separated list. Then, when you want to select the other products bought by customers who bought the product with the ID 12, you can run this query:

SELECT * FROM products WHERE FIND_IN_SET(12,otherproductids);Reducing The Number Of Queries By Using JOINs

Somewhere in the management and control area of your e-commerce website is probably a list of your orders with the names of the customers who made them.

This page might have a query like the following to find all completed orders (with a status value indicating whether an order has been completed):

SELECT * FROM orders WHERE status>1;And for each order it comes across, it might look up the customer’s details:

SELECT * FROM customers WHERE id=1;

SELECT * FROM customers WHERE id=2;

SELECT * FROM customers WHERE id=3;

etcIf this page shows 100 orders at a time, then it has to run 101 queries. And if each of those customers looks up their delivery address in a different table, or looks for the total charge for all of their orders, then the time delay will start to add up. You can make it much faster by combining the queries into one using a JOIN. Here’s what a JOIN looks like for the queries above:

SELECT * FROM orders INNER JOIN customers

ON orders.customerid = customers.id WHERE orders.status>=1;Here is another way to write this, without the word JOIN:

SELECT * FROM orders, customers

WHERE orders.customerid = customers.id AND orders.status>=1;Restructuring queries to use JOINs can get complicated because it involves changing the accompanying PHP code. But if your slow page runs thousands of database statements, then it may be worth a look. For further information, Wikipedia offers a good explanation of JOINs. The columns with which you use a JOIN (customerid in this case) are also prime candidates for being INDEXed.

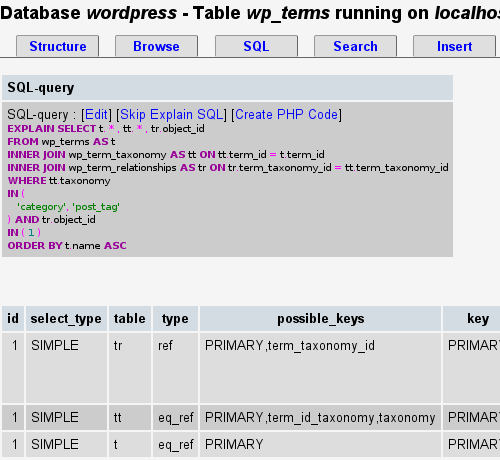

You could also ask MySQL to EXPLAIN a database query. This tells you which tables it will use and provides an “execution plan.” Below is a screenshot showing the EXPLAIN statement being used on one of the more complex WordPress queries from above:

Using the EXPLAIN statement to explain how MySQL plans to deal with a complex query.

The screenshot shows which tables and indices are being used, the JOIN types, the number of rows analyzed, and a lot more information. A comprehensive page on the MySQL website explains what the EXPLAIN explains, and another much shorter page goes over how to use that information to optimize your queries (by adding indices, for instance).

…Or Just Cheat

Finally, returning again to the advanced example above for “Customers who bought this product also bought…,” you could also simply change the functionality to be something less complicated for starters. You could call it “Recommended products” and just return a few other products from the same category or return some hand-picked recommendation.

Conclusion

This article has shown a number of techniques for improving database performance, ranging from simple to quite complex. While all well-built websites should already incorporate most of these techniques (particularly the database indices and JOINs), the techniques do get overlooked.

There is also a lot of debate on forums around the Web about the effectiveness and reliability of some of these techniques (i.e. measuring speed, indexing, optimization, how best to use the cache, etc.), so the advice here is not definitive, but hopefully it gives you an overview of what’s available.

If your website starts to mysteriously slow down after a few months or years, you will at least have a starting point for figuring out what’s wrong.

Flexible CMS. Headless & API 1st

Flexible CMS. Headless & API 1st