What Leap Motion And Google Glass Mean For Future User Experience

Editor's note: Please note that this article explores an entirely hypothetical scenario, and these are opinions, some of which you may not agree with. However, the opinions are based on current trends, statistics and existing technology. If you’re the kind of designer who is interested in developing the future, the author encourages you to read the sources that are linked throughout the article.

With the Leap Motion controller being released on July 22nd and the Google Glass Explorer program already live, it is obvious that the mouse, and even the monitor, will eventually become obsolete as our means of interacting with the Web.

The above statement seems like a given, considering that technology moves at such a rapid pace. Yet in 40 years of personal computing, our methods of controlling our machines haven’t evolved beyond using a mouse, keyboard and perhaps a stylus. Only in the last six years have we seen mainstream adoption of touchscreens.

Given that emerging control devices such as the Leap Motion controller are enabling us to interact with near pixel-perfect accuracy in 3-D space, our computers will be less like dynamic pages of a magazine and more like windows to another world. To make sure we’re on the same page, please take a minute to check out what the Leap Motion controller can do:

_“Introducing the Leap Motion”_

Thanks to monitors becoming portable with Google Glass (and the competitors that are sure to follow), it’s easy to see that the virtual world will no longer be bound to flat two-dimensional surfaces.

In this article, we’ll travel five to ten years into the future and explore a world where Google Glass, Leap Motion and a few other technologies are as much a part of our daily lives as our smartphones and desktops are now. We’ll be discussing a new paradigm of human-computer interface.

The goal of this piece is to start a discussion with forward-thinking user experience designers, and to explore what’s possible when the mainstream starts to interact with computers in 3-D space.

Setting The Stage: A Few Things To Consider

Prior to the introduction of the iPhone in 2007, many considered the smartphone to be for techies and business folk. But in 2013, you’d be hard pressed to find someone in the developed world who isn’t checking their email or tweeting at random times.

So, it’s understandable to think that a conversation about motion control, 3-D interaction and portable monitors is premature. But if the mobile revolution has taught us anything, it’s that people crave connection without being tethered to a stationary device.

To really understand how user experience (UX) will change, we first have to consider the possibility that social and utilitarian UX will be taking place in different environments. In the future, people will use the desktop primarily for utilitarian purposes, while “social” UX will happen on a virtual layer, overlaying the real world (thanks to Glass). Early indicators of this are that Facebook anticipates its mobile growth to outpace its PC growth and that nearly one-seventh of the world’s population owns a smartphone.

The only barrier right now is that we lack the technology to truly merge the real and virtual worlds. But I’m getting ahead of myself. Let’s start with something more familiar.

The Desktop

Right now, UX on the desktop cannot be truly immersive. Every interaction requires physically dragging a hunk of plastic across a flat surface, which approximates a position on screen. While this is accepted as commonplace, it’s quite unnatural. The desktop is the only environment where you interact with one pixel at a time.

Sure, you could create the illusion of three dimensions with drop shadows and parallax effects, but that doesn’t change the fact that the user may interact with only one portion of the screen at a time.

This is why the Leap Motion controller is revolutionary. It allows you to interact with the virtual environment using all 10 fingers and real-world tools in 3-D space. It is as important to computing as analog sticks were to video games.

The Shift In The Way We Interact With Machines

To wrap our heads around just how game-changing this will be, let’s go back to basics. One basic UX and artificial intelligence test for any new platform is a simple game of chess.

(Image: Wikimedia Commons)

In the game of chess below, thanks to motion controllers and webcams, you’ll be able to “reach in” and grab a piece, as you watch your friend stress over which move to make next.

Now you can watch your opponent sweat. (Image: Algernon D’Ammassa)

In a game of The Sims, you’ll be able to rearrange furniture by moving it with your hands. CAD designers will use their hands to “physically” manipulate components (and then send their design for prototyping to the 3-D printer they bought from Staples).

While the lack of tactile feedback might deter mainstream adoption early on, research into haptics is already enabling developers to simulate physical feedback in the real world to correspond with the actions of a user’s virtual counterpart. Keep this in mind as you continue reading.

Over time, this level of 3-D interactivity will fundamentally change the way we use our desktops and laptops altogether.

Think about it: The desktop is a perfect, quiet, isolated place to do more involved work like writing, photo editing or “hands-on” training to learn something new. However, a 3-D experience like those mentioned above doesn’t make sense for social interactions such as on Facebook or even for reading the news, both of which are better suited to mobile.

With immersive, interactive experiences being available primarily via the desktop, it’s hard to imagine users wanting these two experiences to share the same screen.

So, what would a typical desktop experience look like?

Imagine A Cooking Website For People Who Can’t Cook

With this cooking website for people who can’t cook, we’re not just talking about video tutorials or recipes with unsympathetic instructions, but rather immersive simulations in which an instructor leads you through making a virtual meal from prep to presentation.

Interactions in this environment would be so natural that the real design challenge is to put the user in a kitchen that’s believable as their own.

You wouldn’t click and drag the icon that represents sugar; you would reach out with your virtual five-fingered hand and grab the life-sized “box” of Domino-branded sugar. You wouldn’t click to grease the pan; you’d mimic pushing the aerosol nozzle of a bottle of Pam.

The Tokyo Institute of Technology has already built such a simulation in the real world. So, transferring the experience to the desktop is only a matter of time.

“Cooking simulator will help you cook a perfect steak every time”

UX on the future desktop will be about simulating physics and creating realistic environments, as well as tracking head, body and eyes to create intuitive 3-D interfaces, based on HTML5 and WebGL.
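To give a feel for what “simulating physics” means at its most basic, here is a minimal Python sketch of a virtual object being released from the hand and falling onto a countertop. It is only an illustration under my own assumptions: the `Body` class, the `step` and `time_to_land` functions, the 60 frames-per-second time step and the choice of semi-implicit Euler integration are all hypothetical and not taken from any particular engine or from the simulations mentioned above.

```python
from dataclasses import dataclass

GRAVITY = -9.81  # metres per second squared, pointing down


@dataclass
class Body:
    height: float    # metres above the virtual countertop (assumed unit)
    velocity: float  # metres per second, positive is up


def step(body: Body, dt: float) -> Body:
    """Advance one frame using semi-implicit Euler integration."""
    velocity = body.velocity + GRAVITY * dt
    height = body.height + velocity * dt
    if height <= 0.0:
        # The object has reached the countertop: bring it to rest there.
        return Body(height=0.0, velocity=0.0)
    return Body(height=height, velocity=velocity)


def time_to_land(start_height: float, dt: float = 1 / 60) -> float:
    """Simulate a released object until it rests on the countertop."""
    body = Body(height=start_height, velocity=0.0)
    elapsed = 0.0
    while body.height > 0.0:
        body = step(body, dt)
        elapsed += dt
    return elapsed
```

Calling `time_to_land(1.0)` steps the object frame by frame from one metre up until it comes to rest. A believable kitchen would need far more than this (collisions, friction, rotation), but every one of those effects is built on a per-frame update loop of this kind.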

Aside from the obvious hands-on applications, such as CAD and art programs, the technology will shift the paradigm of UX and user interface (UI) design in ways that are currently difficult to fathom.

The problem right now is that we currently lack a set of clearly defined 3-D gestures to interact with a 3-D UI. Designing UIs will be hard without knowing what our bodies will have to do to interact.
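To make the idea of a “defined gesture” concrete, here is a minimal Python sketch of how two gestures might be specified from tracked 3-D points. Everything in it is an assumption for illustration: the coordinate convention (x to the right, y up, z toward the user, in millimetres), the thresholds, and the function names `is_pinching` and `classify_swipe` are hypothetical and do not come from the Leap Motion SDK or any other real API.

```python
import math
from typing import Tuple

# A tracked point in a hypothetical sensor space, in millimetres.
# Assumed axes: x to the right, y up, z toward the user.
Point3D = Tuple[float, float, float]

PINCH_THRESHOLD_MM = 25.0  # assumed value; would need tuning per device
MIN_SWIPE_TRAVEL_MM = 80.0  # assumed value; ignores small hand jitter


def is_pinching(thumb_tip: Point3D, index_tip: Point3D,
                threshold: float = PINCH_THRESHOLD_MM) -> bool:
    """A pinch is defined here as thumb and index tips nearly touching."""
    return math.dist(thumb_tip, index_tip) < threshold


def classify_swipe(start: Point3D, end: Point3D,
                   min_travel: float = MIN_SWIPE_TRAVEL_MM) -> str:
    """Name a hand movement by the axis along which it travelled most."""
    if math.dist(start, end) < min_travel:
        return "none"
    deltas = [e - s for s, e in zip(start, end)]
    axis = max(range(3), key=lambda i: abs(deltas[i]))
    # Negative then positive direction for each axis; negative z moves
    # away from the user, toward the screen, so it is called a push.
    names = (("left", "right"), ("down", "up"), ("push", "pull"))
    return names[axis][1 if deltas[axis] > 0 else 0]
```

For example, `classify_swipe((0, 0, 0), (5, 5, -100))` returns `"push"` under these assumptions. The hard design question is not the arithmetic but the agreement: which movements count, how large they must be, and what each one should mean across applications.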

The closest we have right now to defined gestures are those created by Kinect hackers and John Underkoffler of Oblong Industries (the team behind Minority Report’s UI).

In his TED talk from 2010, Underkoffler demonstrates probably the most advanced example of 3-D computer interaction that you’re going to see for a while. If you’ve got 15 minutes to spare, I highly recommend watching it:

John Underkoffler’s talk “Pointing to the Future of UI”

Now, before you start arguing, “Minority Report isn’t practical — humans aren’t designed for that!” consider two things:

- We won’t likely be interacting with 60-inch room-wrapping screens the way Tom Cruise does in Minority Report; therefore, our gestures won’t need to be nearly as big.

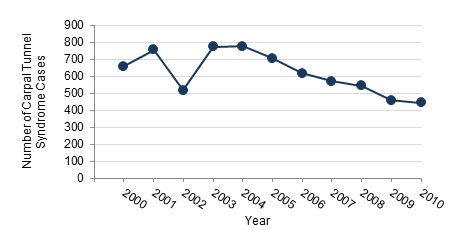

- The human body rapidly adapts to its environment. Between the years 2000 and 2010, a period when home computers really went mainstream, reports of Carpal Tunnel Syndrome dropped by nearly 8%.

(Image: Minnesota Department of Health)

More importantly, because the Leap Motion controller costs less than $80 and will be available at Best Buy, this technology isn’t just hypothetical, sitting in a lab somewhere, with a bunch of geeks saying “Wouldn’t it be cool if…”

It’s real and it’s cheap, which really means we’re about to enter the Wild West of true 3-D design.

Social Gets Back To The Real World

So, where does that leave social UX? Enter Glass.

It’s easy to think that head-mounted augmented reality (AR) displays, such as Google Glass, will not be adopted by the public, and in 2013 that might be true.

But remember that we resisted the telephone when it came out, because of many of the same privacy concerns. The same goes for mobile phones and for smartphones around 2007.

So, while first-generation Glass won’t likely be met with widespread adoption, it’s the introduction of a new phase. ABI Research predicts that the wearable device market will exceed 485 million annual shipments by 2018.

According to Steve Lee, Glass’ product director, the goal is to “allow people to have more human interactions” and to “get technology out of the way.”

First-generation Glass performs Google searches, tells time, gives turn-by-turn directions, reports the weather, snaps pictures, records video and does Hangouts — which are many of the reasons why our phones are in front of our faces now.

Moving these interactions to a heads-up display, while moving important and more heavy-duty social interactions to a wrist-mounted display, like the Pebble smartwatch, eliminates the phone entirely and enables you to truly see what’s in front of you.

(Image: Pebble)

Now, consider the possibility that something like the Leap Motion controller could become small enough to integrate into a wrist-mounted smartwatch. This, combined with a head-mounted display, would essentially give us the ability to create an interactive virtual layer that overlays the real world.

Add haptic wristband technology and a Bluetooth connection to the smartwatch, and you’ll be able to “feel” virtual objects as you physically manipulate them both in the real world and on the desktop. While this might still sound like science fiction, with Glass reportedly to be priced between $299 and $499, Leap Motion at $80 and Pebble at $150, widespread affordability of these technologies isn’t entirely impossible.

Social UX In The Future: A Use Case

Picture yourself walking out of the mall, and your close friend Jon updates his status. A red icon appears in the top right of your field of vision. Your watch displays Jon’s avatar, which says, “Sooo hungry right now.”

You say, “OK, Glass. Update status: How about lunch? What do you want?” and keep walking.

“Tacos.”

You say, “OK, Glass. Where can I get good Mexican food?” Glass responds, “Forty friends have favorably rated Rosa’s Cafe. Would you like directions?” “Yes.” The navigation starts, and you’re en route.

You reach the cafe, but Jon is 10 minutes away. “Would you like an audiobook while you wait?” Glass asks. “No, play music.” A smart playlist compiles exactly 10 minutes of music that perfectly fits your mood.

“OK, Glass. Play Angry Birds 4.”

Across the table, 3-D versions of the little green piggies and their towers materialize.

In front of you are a red bird, a yellow bird, two blue birds and a slingshot. The red bird jumps up, you pull back on the slingshot, the trajectory beam shows you a path across the table, you let go and knock down a row of bad piggies.

Suddenly, an idea comes to you. “OK, Glass. Switch to Evernote.”

A piece of paper and a pen are projected onto the table in front of you, and a bulletin board appears to the left.

You pick up the AR pen, jot down your note, move the paper to the appropriate bulletin, and return to Angry Birds.

You could make your game visible to other Glass wearers. That way, others could play with you — or, at the very least, would know you’re not some crazy person pretending to do… whatever you’re doing across the table.

When Jon arrives, notifications are disabled. You push the menu icon on the table and select your meal. Your meal arrives; you take photos of your food, eat and publish to Instagram 7.

Before you leave, the restaurant gives a polite notification, letting you know that a coupon for 10% off will be sent to your phone if you write a review.

How Wearable Technology Interacts With Desktops

Later, having finished the cooking tutorial on the desktop, you decide it’s time to make the meal for real. You put on Glass and go to the store. The headset guides you directly to the brands that were advertised “in game.” After picking out your ingredients, you receive a notification that a manufacturer’s coupon has been sent to your phone and can be used at the check-out.

When you get home, you lay a carrot on the cutting board and an overlay projects guidelines on where to cut. You lay out the meat, and a POW graphic is overlaid, showing you where to hit for optimal tenderness.

You put the meat in the oven; Glass starts the timer. You put the veggies in the pan; Glass overlays a pattern to show where and when to stir.

While you were at the store, Glass helped you to pick out the perfect bottle of wine to pair with your meal (based on reviews, of course). So, you pour yourself a glass and relax while you wait for the timer to go off.

In the future, augmented real-world experiences will be turned into real business. The more you enhance real life, the more successful your business will be. After all, is it really difficult to imagine this cooking experience being turned into a game?

What Can We Do About This Today?

If you’re the kind of UI designer who seeks to push boundaries, then the best thing you can do right now is think. Because the technology isn’t 100% available, the best you can do is open your imagination to what will be possible when the average person has evolved beyond the keyboard and mouse.

Draw inspiration from websites and software that simulate depth to create dynamic, layered experiences that can be easily operated without a mouse. The website of agency Black Negative is a good example of future-inspired “flat” interaction. It’s easy to imagine interacting with this website without needing a mouse. The new Myspace is another.

To go really deep, look at the different Chrome Experiments, and find a skilled HTML5 and WebGL developer to discuss what’s in store for the future. The software and interactions that come from your mind will determine whether these technologies will be useful.

Conclusion

While everything I’ve talked about here is conceptual, I’m curious to hear what you think about how (or even if) these devices will affect UIs. I’d also love to hear your vision of future UIs.

To get started, let me ask you two questions:

- How will the ability to reach into the screen and interact with the virtual world shape our expectations of computing?

- How will untethering content from flat surfaces fundamentally change the medium?

I look forward to your feedback. Please share this article if you’ve enjoyed this trip into the future.

