Designing For The Internet Of Emotional Things

More and more of our experience online is personalized. Search engines, news outlets and social media sites have become quite smart at giving us what we want. Perhaps Ali, one of the hundreds of people I’ve interviewed about our emotional attachment to technology, put it best:

"Netflix's recommendations have become so right for me that even though I know it's an algorithm, it feels like a friend."

Personalization algorithms can shape what you discover, where you focus attention, and even who you interact with online. When these algorithms work well, they can feel like a friend. At the same time, personalization doesn’t feel all that personal. There can be an uncomfortable disconnect when we see an ad that doesn’t match our expectations. When personalization tracks too closely to interests that we’ve expressed, it can seem creepy. Personalization can create a filter bubble by showing us more of what we’ve clicked on before, rather than exposing us to new people or ideas.

Your personalization algorithm, however, has only one part of the equation – your behaviors. The likes, videos, photos, locations, tags, comments, recommendations and ratings shape your online experience. But so far, your apps and sites and devices don’t know how you feel. That’s changing.

Emotions are already becoming a part of our everyday use of technology. Emotional responses like angry, sad and wow! are now integrated into our online emotional vocabulary as we start to use Facebook’s reactions. Some of us already use emotion tracking apps, like Moodnotes, not only to understand our emotions but to drive positive behavior change.

Emotion-sensing technology is moving from an experimental phase to a reality. The Feel wristband and the MoodMetric ring use sensors that read galvanic skin response, pulse, and skin temperature to detect emotion in a limited way. EmoSPARK is a smart home device that creates an emotional profile based on a combination of word choice, vocal characteristics and facial recognition. This profile is used to deliver music, video and images according to your mood.

Wearables that can detect physical traces like heart rate, blood pressure and skin temperature give clues about mood. Screens that detect facial expressions are starting to be mapped to feelings. Text analysis is becoming more sensitive to nuance and tone. Now voice analysis is detecting emotion too. Affective computing, where our devices take inputs from multiple sources like sensors, audio and pattern recognition to detect emotion, is starting to become a real part of our experience with technology.
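
To make the idea of combining inputs a little more concrete, here is a minimal sketch in Python. It assumes each source (a wearable, voice analysis, facial analysis) already reports a score between 0 and 1 for each emotion. The function name `fuse_emotion_scores`, the source labels, the weights and the numbers are all hypothetical and chosen only for illustration; real affective computing systems are considerably more sophisticated than a weighted average.

```python
from collections import defaultdict


def fuse_emotion_scores(readings, weights):
    """Combine per-source emotion scores into one weighted estimate.

    readings: {source: {emotion: score between 0 and 1}}  (hypothetical format)
    weights:  {source: how much we trust that source}     (hypothetical values)
    """
    totals = defaultdict(float)
    weight_sum = 0.0
    for source, scores in readings.items():
        weight = weights.get(source, 0.0)
        if weight <= 0:
            continue  # skip sources we have no trust value for
        weight_sum += weight
        for emotion, score in scores.items():
            totals[emotion] += weight * score
    if weight_sum == 0:
        return {}
    # Normalize so the result stays on the same 0-to-1 scale as the inputs.
    return {emotion: total / weight_sum for emotion, total in totals.items()}


# Illustrative, made-up readings from three kinds of sensors.
readings = {
    "wearable": {"stress": 0.8, "calm": 0.2},
    "voice": {"stress": 0.6, "calm": 0.4},
    "face": {"stress": 0.4, "calm": 0.6},
}
weights = {"wearable": 0.5, "voice": 0.3, "face": 0.2}

fused = fuse_emotion_scores(readings, weights)
dominant = max(fused, key=fused.get)
print(dominant, round(fused[dominant], 2))
```

Even in a toy like this, the design questions show up quickly: which source deserves more trust, and what should happen when the sources disagree? Those are choices a designer, not just an algorithm, has to weigh in on.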

Maybe, just maybe, the Internet of Things will start to really get us – understanding our emotions, making us aware of them, helping us to take action on our emotions. What does this mean for how we design technology?

It may be difficult to imagine all the ways that emotion-sensing artificial intelligence, or emotionally intelligent technology, will shape experience. Let’s start by looking at the most familiar type of emotionally intelligent technology: a conversational app.

The Internet Of Emotional Things

When we think of an emotional interaction with technology, we often think of a conversation. From Joseph Weizenbaum’s ELIZA project in the mid-1960s, which used natural language processing to simulate conversation, to science fiction stories like the movie Her, conversation and emotion are bound together. It seems natural. It seems like it hasn’t really been designed.

Of course, emotion-sensing experiences will have to be designed. Algorithms can only go so far without a designer. Algorithms translate various data inputs into an output that is an experience. Designers have a role in determining and training the input, with sensitivity to ambiguity and context. The output, whether on a screen or not, is a set of interactions that need to be designed.

For instance, the conversation you have with Siri is, of course, designed. The way your emotions are displayed on your mood-tracking app is designed. Even the interplay between artificial intelligence and a human assistant on a travel-planning app is designed.

As emotionally intelligent technology evolves, interactions will extend beyond conversational apps. Personalization, whether recommendations or news feeds, will rely on a layer of data from emotion-sensing technology in the near future too.

The next generation of emotion-sensing technology will challenge designers to really get in touch with emotions, positive and negative. Emotionally intelligent technology will convey and evoke emotions on four levels:

- Revealing our emotions to ourselves

- Revealing our emotions to others

- Developing recommendations based on our emotions

- Communicating in more human ways

Revealing Our Emotions To Ourselves

The quantified-self movement started out as niche, with homegrown tools and meet-ups. Now, tracking our health, physical and mental, is becoming more common. Current research estimates that one in ten people own a fitness tracker of some kind. Add in smartwatches and that number may be higher.

Because we want to know ourselves better, and maybe want to design better lives for ourselves, tracking our emotions, especially stress triggers or peak moments, potentially adds another layer of understanding. Reflecting on our emotions may be a positive thing, allowing us to savor experiences or cultivate happiness. Of course, there are dangers too. Revealing and reflecting on negative emotions may cause anxiety or exacerbate mental illness.

Revealing Our Emotions To Others

Early work in affective computing focused on helping children with autism, people with post-traumatic stress disorder (PTSD), or people with epilepsy. Rosalind Picard, who founded the field of affective computing, has developed emotion-sensing technology that detects small signals, helping caregivers know what is coming and aiding behavior change.

It’s easy to see how parents of toddlers or people with aging parents could benefit from emotion-sensing technology to facilitate communication, but it won’t be limited to specialized use. Just as we share our fitness milestones, or compare notes on strategies for our physical health, we are also likely to share our emotions using technology. Maybe we will share emotions with text messages, through our clothing – maybe even with butterflies.

Chubble is an example of an app that lets you share live moments with your friends and see their emotional reaction in real time. In other words, emotionally intelligent technology will let us share our emotions explicitly and in more subtle ways too.

Emotionally intelligent technology will also have to know when not to share emotions, or simply prevent you from inadvertently sharing emotion. It will also have to know how to provide context and history. Even the best of intentions can go wrong. Consider Samaritans Radar, which alerts you to signs of depression from friends’ social media feeds. It could easily miss context and history, in addition to leaving your friend vulnerable to people with bad intentions.

Developing Recommendations Based On Our Emotions

We have already noted that emotionally intelligent technology can make us aware of our own emotions and communicate those emotions to others. The next step is to offer recommendations based on those emotions. Rather than recommendations based on past purchases, like Amazon’s, or places you’ve been, like Foursquare’s recommendations, imagine recommendations based on aggregated data from emotion-sensing technology. The emotional state of many people could be matched up with your current state to result in something like: “People feeling energetic like you go to Soul Cycle at Union Square.”
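
As a rough sketch of how such a recommendation might be assembled, the Python below counts where people in the same emotional state have gone and suggests the most common place. Everything here is an assumption made for illustration: the function name `recommend_for_mood`, the shape of the `visit_log`, and the sample data are invented, and a real system would need far richer signals, plus consent for collecting them.

```python
from collections import Counter


def recommend_for_mood(current_mood, visit_log, top_n=1):
    """Suggest the places most often visited by people in the same mood.

    visit_log: list of (mood, place) pairs aggregated from many people
               (hypothetical format for this sketch).
    """
    counts = Counter(place for mood, place in visit_log if mood == current_mood)
    return [place for place, _ in counts.most_common(top_n)]


# Made-up aggregate data: the mood someone reported and where they went.
visit_log = [
    ("energetic", "spin class"),
    ("energetic", "spin class"),
    ("energetic", "climbing gym"),
    ("tired", "tea house"),
]

picks = recommend_for_mood("energetic", visit_log)
print(f"People feeling energetic like you go to: {picks[0]}")
```

Notice that a simple count like this would also reproduce the filter bubble described earlier, only now organized around mood instead of clicks. That is one reason the matching logic deserves a designer’s attention.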

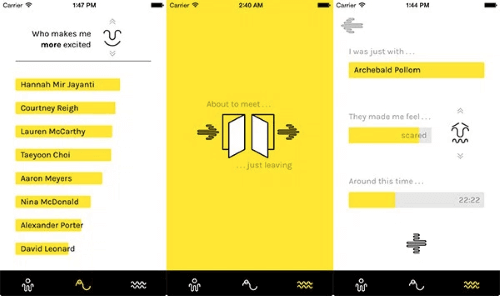

Although a little tongue in cheek, pplkpr is an example of an emotionally intelligent recommendation tool. Pplkpr asks users in the app how they feel and pairs their answers with physiological data collected through a wristband to tell them which friends and colleagues are better for their mental health. This speculative app is designed, in part, to call into question the ethics of emotions. For example, what do you do when your app tells you a close friend is bad for your health?

Communicating In A More Human Way

When devices can understand emotions, they can adjust the way they communicate with us. Siri is fun to prank because she usually doesn’t get when we are joking. Imagine if she did. Emotionally intelligent robots will learn our emotions and show emotion in a rudimentary way. Pepper, an emotion-sensing robot companion, is not just popular with consumers but is being used by companies to provide customer support services.

In the near future, emotion will be designed into the experience. For example, the text your mobile wallet sends to tell you that your impulse clothing purchase won’t make you happy will be designed. The screen on your refrigerator that cautions you to wait 20 minutes before deciding to binge on ice cream after a stressful day will be designed. The visualization of your child’s emotion data during a school day, and suggested responses, will also be designed.

Designing technology that collects data, maps it to emotions, and then conveys or evokes emotion in various ways means developing a greater overall sensitivity to emotion. This presents a few challenges to the current experience design process that is in place in many organizations. It means greater collaboration between algorithm designers and experience designers. It will also mean a change in our approach to emotional design.

The Emotional Gap

Design is about solving problems. It’s a mantra for anyone working in design. As designers, we neatly map out problems in steps and reduce friction so that people don’t have to spend a lot of effort on everyday tasks. We try to bring technology to a vanishing point.

Designing for emotion is not always part of the design process, though. Thanks to Don Norman’s insights in Emotional Design, we know that design can have a deep emotional impact as well as behavioral and cognitive ones. This work has prompted designers to add moments of delight to the foundations of useful and usable design.

While this is a good start, there is still a big gap. As designers we strive to end the customer journey with a smile, but we haven’t really worked out what to do with the rest of the emotions. Even positive emotion is sketched in broad strokes rather than considered, as emotions seem complicated and a little uncomfortable.

Design researchers are mixing more methods than ever before to understand experience, ranging from ethnographic interviews to usability testing to analytics. The focus is primarily on observed behavior, measurable actions, or reported thoughts. Gestures and facial expressions may be noted, but they are certainly not the type of data that is available through analytics. Biometrics are still not the norm in the research process because, until recently, they have been too expensive and time-consuming. Whether from qualitative research or quantitative sources, teams simply don’t have a lot of data about emotion readily available to inform design.

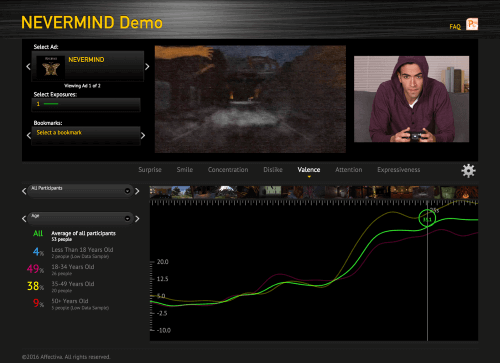

But soon, designers will have more data about emotion. Emotion-sensing data will come from a variety of sources. Technology that translates facial expressions to emotions, like Affectiva or Kairos, will inform the work of game designers and others who create experiences that prompt very strong emotional responses. Nevermind is one of the first games developed with the Affectiva API, combining physiological biofeedback tracked through a wristband with a webcam that reads facial expressions and tracks eye movement. As a result, the game can read and respond to emotions like fear, stress, and maybe joy.

Voice is another way that emotion-sensing technology will work. Beyond Verbal recognizes tone and prosody to identify a spectrum of human emotion. Right now it’s being used in a customer service context, but as apps become conversational, expect that it will be more commonly used. It’s already employed in some connected home products. For example, Withings currently offers a wireless camera for the home with cry detection.

Social listening is becoming sensitive to a range of emotions rather than just identifying positive and negative. Blurrt and Pulsar experiment with a combination of text and image analysis to detect emotions. There’s even emoji analytics. Emogi is an analytics tool that offers emotion data around emoji use.

More apps and sites will layer in ways to collect data about emotion and use it as part of the design. Facebook’s reactions don’t just give people a way to express more emotional range; they give designers (and marketers) emotional data too. Apple’s recent purchase of Emotient, maker of a facial recognition tool, suggests that many apps may start to add an emotional layer to core features. Ford is developing a conversational interface attuned to emotion.

Certainly, there are limitations to matching behavioral and physical patterns against assumptions about state of mind. Hopefully, emotion-sensing technology won’t trivialize emotions. It may do the opposite. Facial recognition can detect fleeting expressions that we may not consciously register or we might otherwise miss. Consistently recording physical signs of emotion means that our apps will be attuned to the signs of emotion in a way that we are not. There may come a time quite soon when our devices start to understand our emotions better than we do ourselves. How will we use this data to shape emotional experiences?

Just having more data about emotion doesn’t automatically create a more human experience, though. Including data about emotions in the design process will have the biggest impact on three key aspects of design. Data about emotion provides a new dimension of context around behavior, anticipatory experiences, and personalization algorithms.

Predictably Rational

Right now our devices perform rationally, and operate as if we are rational too. Even irrational biases are often understood and implemented with rational rules. For instance, the bandwagon effect, where people are influenced to do something because other people do it, is typically addressed by prominently featuring testimonials. Loss aversion, the tendency to prefer avoiding losses to acquiring gains, can be counterbalanced by the right approach to trial periods.

Emotion sensing doesn’t negate a behavioral approach but introduces a new level of context around rational and irrational behavior. Think, for instance, of Amazon recommendations based on your emotional reaction to a product, like a grimace or an eye roll, rather than based on your previous purchases or those of others.

Invisibly Designed

A key goal for design, especially when it comes to the connected home or wearables, is for the interface to get out of the way. You don’t want to spend time with the Internet of Annoying Things constantly interrupting and demanding attention. This approach has to make assumptions based on backwards-facing and fragmented behavioral data such as browsing history, purchases, interactions and perhaps credit reporting agency data.

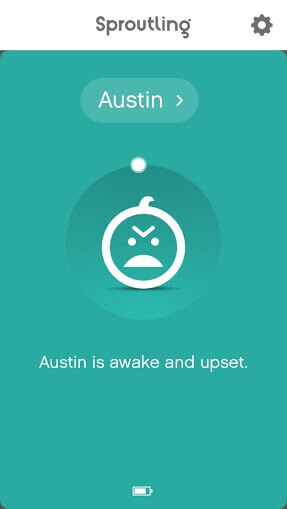

Adding in a layer of emotional intelligence can bring that experience to the immediate present rather than being grounded only in past data. Imagine your Nest cam picks up on your baby making sounds during a nap, but because it has voice sensing for emotion, it also lets you know that the baby is 90% likely to settle back down.

Generically Personal

Personalization is hard to design well. Somewhere between users interviewed in initial design research and the behavioral data that fuel the algorithms, there is a real person. With enough data, algorithms can replicate existing patterns but it’s more difficult to allow systems to organize around emergent behaviors. Personalization stays in the past. Humans are in motion toward the future.

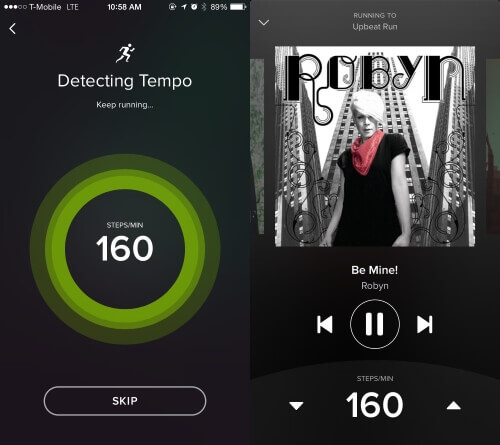

Tuning in to emotions could introduce a new layer of understanding. Rather than selecting a curated list called Confidence Boost, imagine instead that Spotify picks up on signals from your emotion-sensing wristband to develop a custom playlist for your mood.

At the heart of emotionally intelligent technology lies a paradox, though. Emotion promises to bring greater empathy to bear on our experiences, and yet it will also be used to guide and shape our emotions. Right now, for instance, data about emotion is used mostly for testing ads or marketing brands on social media. The privacy and ethics around the Internet of Emotional Things are no small matter. Rather than rushing to commercialize emotion, the key will be calibrating what we expect technology to do.

Since designers are creating the experience that people will engage with, we should have an important role in that conversation. So, despite the potential negatives, how can we design emotionally intelligent technology that might actually have a net positive effect on our lives?

Toward More Emotionally Intelligent Design Of Technology

To actively design the Internet of Emotional Things, we will have to adopt new practices.

Rethink Easy

Designers strive for simplicity. Reducing friction is a given. The result, however, is that the experience may be flattened because it is about efficiency first. When we consider emotion, designers may actually want to create a little friction. An invisible experience is not always an emotional one. Get inspired by the research of the Affective Computing group at the MIT Media Lab to see how invisible design and design that explicitly engages emotion might come together.

Design For Pre-experience And Re-experience

Customer journeys sketch pain points and positive touchpoints, still mostly for in-the-moment, in-app experience. As design becomes more emotionally driven, understanding anticipation and memory will start to demand more thoughtful attention. Watch Daniel Kahneman’s TED talk about experience and memory to get started.

Build In Sensory Experience

Emotion-sensing technology relies on more than just text, images, or even emojis. It’s more than what appears on a screen. This technology relies on expression, gesture and voice to understand emotion, so this means designers need to think beyond a visual experience. Physical spaces, from retail stores to urban transportation to gardens, are designed for the senses. Take a field trip to a local children’s museum to experience what it means to design for the senses.

Embrace Emotional Complexity

With our Internet of Things becoming more emotionally attuned to us, designers will need to become more sensitive to emotional granularity. Not just happy and sad, pain or pleasure. Developing a working vocabulary around emotional nuance will be essential. Understanding differences between emotion, mood, and cognition will not be optional. Start developing your emotional sensitivity by using Mood Meter or trying out an app like Feelix that tries to bridge emotion and design.

Do Empathy One Better

Empathy, as a design practice, will start to rely on new ways of ethnographic research and design thinking – from stepping into an experience using virtual reality to spending a day in someone else’s Facebook account. Empathy needs to move from focusing only on real-life experience to the experience people have living with algorithms and emotion sensing. The Design and Emotion Society’s collection of tools and techniques can get you started.

Bridge The Algorithmic Self And The Actual Person

Designers, with a practice grounded in empathy, can provide the link between the algorithmic shadow self – created from links clicked and posts liked and people followed and purchases made – and the actual person. Designing ways to pair human intelligence and artificial intelligence will be a hallmark of emotionally intelligent design. Look at how Crystal uses artificial intelligence to create a personality profile and then pairs it with human input.

Emotions aren’t a problem to be solved. People are complicated. People change. People are often a little conflicted. Algorithms can have a hard time with that. Emotion-sensing data promises to balance machine logic with a more human touch, but it won’t be successful without human designers.

Now, context and empathy are at the center of design practices. Next, designers can work toward broadening the range of emotions and senses that are considered as part of the design. In the coming wave of emotionally intelligent and mood-aware Internet of Things, the designer’s role will be more important than ever and practices must adapt one step ahead of development.

Other Resources

- “Affective Computing,” Rosalind Picard

Picard’s book lays the foundation for emotionally intelligent technology. The intellectual framework discussed in the first part of the book is essential reading.

- “Emotional Design,” Don Norman

You may be conversant with the chapters on visceral, behavioral and reflective design. Reread the final chapters about where emotions and technology will intersect in the near future. Norman is remarkably prescient. You may also want to look at a short video about Cynthia Breazeal’s Kismet, the emotionally intelligent robot that Norman discusses in the last chapters of the book.

- “Emotions Revealed,” Paul Ekman

If you have read Malcolm Gladwell’s Blink, you are already familiar with Paul Ekman. His facial action coding system, which maps emotions like anger, fear, sadness, disgust and happiness to our facial expressions, is the foundation of emotion-sensing tools like Kairos and Emotient. If you want something more technical, Ekman’s book Emotion in the Human Face documents the research. To see how it works, try out Affectiva’s facial coding demo.

- “Design for Real Life,” Eric Meyer and Sara Wachter-Boettcher

The next wave of emotional design will need to be more than empathetic. It will need to be compassionate. This book is a glimpse into the future of some of the issues designers may face.

- “Heartificial Intelligence,” John C. Havens

As technology becomes more sensitive to emotion, ethical concerns will be more pronounced. Havens’s book is a considered and playful exploration of the kinds of scenarios designers may have to think about in the near future.

Further Reading

- The Personality Layer

- Not Just Pretty: Building Emotion Into Your Websites

- Designing For Emotion With Hover Effects

- Give Your Website Soul With Emotionally Intelligent Interactions
