Ethical Design: The Practical Getting-Started Guide

By now, most people working in tech know and feel the deep concerns related to surveillance capitalism fostered and upheld by the tech giants. We understand that the root of the problem lies within the business model of capitalising on and monetising user data. Stories of how people are being exploited surface on a daily basis, like the recent story about how Instagram withholds like notifications from certain users in order to increase the rate at which they open the app. In the same story, The Globe and Mail describes how former high-level employees of Facebook are growing a conscience and telling horrifying stories about how features are meticulously built to exploit human behavior and make us addicted to social media.

As designers and developers we have an obligation to build experiences that are better than that. This article explains how unethical design happens, and how to do ethical design through a set of best practices. It also helps you understand how you can plant the seed to change the meaning within the company you work for and in the design community, even if you are not part of the management layer. Change starts with a movement!

Ethical Design

Let’s start with the core terminology: According to Merriam-Webster, ethics is “the discipline dealing with what is good and bad and with moral duty and obligation.” For the purpose of this article, ethics will be defined as a system of moral principles that defines what is perceived as good and evil. Ethical design is, therefore, design made with the intent to do good, and unethical design is its black hat counterpart.

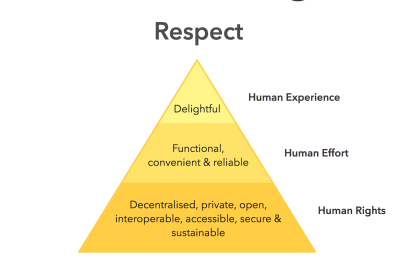

Ind.ie is a social enterprise striving for justice in the digital age. It was founded by Aral Balkan and Laura Kalbag, who defined an “Ethical Hierarchy of Needs” that describes the core of ethical design very well.

As with any pyramid-shaped structure, each layer in the Ethical Hierarchy of Needs rests on the layer below it. If any layer is broken, the layers resting on top of it will collapse. If a design does not support human rights, it is unethical. If it supports human rights but does not respect human effort by being functional, convenient and reliable (and usable!), then it is unethical. If it respects human effort but does not respect human experience by making a better life for the people using it, then it is still unethical.

From a practical viewpoint, this means that products and services that exploit user data, use dark patterns and generally are only out to make money, disregarding their human purpose, are unethical. Let’s look at how unethical design manifests itself in business models and design decisions.

Unethical Design: The Black Hat Of The Business

Surveillance Capitalism

Data-driven design can be used to do good. But more often than not, it is used with monetary intent, a practice also known as surveillance capitalism.

As Aral Balkan, ethical designer and founder of ind.ie, puts it:

“When a company like Facebook improves the experience of its products, it’s like the massages we give to Kobe beef: they’re not for the benefit of the cow but to make the cow a better product. In this analogy, you are the cow.”

Surveillance capitalism is unethical by nature because at its core, it takes advantage of rich data to profile people and understand their behavior with the sole purpose of making money. The most chilling thought of all is how data is being used not just to predict and manipulate current behavior, but how it is used to profile our future selves through machine learning, ultimately giving companies the power to impact our future decisions and behavioral patterns.

As Cracked Labs, independent research institute and creative laboratory, states in their report about Data Against People:

“Systems that make decisions about people based on their data produce substantial adverse effects that can massively limit their choices, opportunities, and life-chances”.

This happens on a daily basis to everyone who uses Facebook, where the individualized feed is carefully filtered to show the posts most likely to trigger engagement and activity. Pricing is also becoming increasingly individualized because companies are able to use rich data to assess the long-term value of customers, a practice also known as data-driven persuasion.

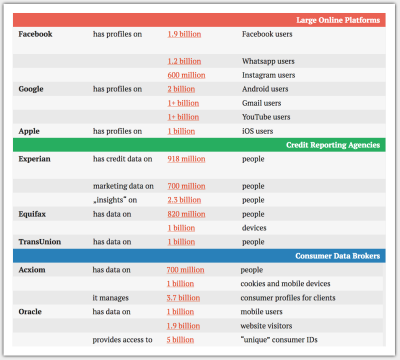

Data trade and data tracking are big business. According to the report “Corporate Surveillance in Everyday Life”, Oracle provides access to 5 billion (yes, billion!) unique user IDs (this is confirmed on Oracle’s website). The word “scared” does not cover the emotional state we should all be in over that fact.

One can only imagine how companies will be able to utilize data to profile which of us are more likely to develop mental health or physical issues, thus putting us in the “no thanks” pile of applications for our future jobs. With this in mind, I fear for the future of my children.

To some, the above may sound like something out of a science fiction movie, but it is not far-fetched at all. Unethical companies are exposed daily, from the VPN app that claimed to protect the data of its 24 million users but sold it to Facebook, to Alphonso, software that (according to an article in The New York Times) is used in more than 250 game apps, some of which are designed for children, to monitor what TV ads people watch, even when the app is not in active use. The list of companies that harvest and use data for deeply unethical purposes goes on and on.

Black Hat Design

Nevertheless, data tracking is not the only way that unethical design plays out. Dark patterns fall under unethical design too, as they are black hat design patterns specifically designed to trick us into doing something we don’t necessarily want to do.

It may not be considered unethical when a company makes use of the dark pattern called Roach Motel to make it nearly impossible to delete your account (looking at you, Skype). But looking at the motivation on the business side of things, it is not hard to see the unethical nature of the design (let’s not forget the Chinese shoe company that tricked people into swiping by adding a fake strand of hair to its Instagram ad).

Harry Brignull, one of the originators and driving forces behind Dark Patterns, states that dark patterns work because they take advantage of the human brain’s weaknesses and the way we are hard-wired. That counts as unethical in my book, and as the people who design these products, we have a moral obligation to do better.

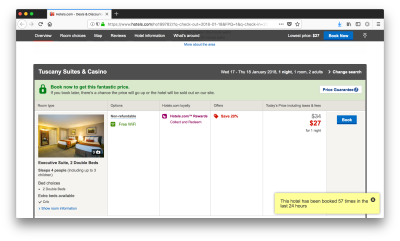

Businesses that nurture consumerism and manipulate people into buying more stuff are unethical by design. This is common practice in e-commerce, and it takes advantage of a phenomenon called “loss aversion”. Hotels.com is particularly aggressive, sending several notifications within seconds about how many people have booked and are looking at the same room as you.

The General Data Protection Regulation

The General Data Protection Regulation (GDPR), which takes effect in late May 2018, will be an effective tool in the fight against companies that are unethical by design.

GDPR is an extensive regulation. Its highlights include:

- The requirement of any organization that collects data to do so in a secure manner by design;

- Heavy fines for data breaches;

- Data must only be collected after explicit consent, and the language used to explain why the data is collected must be plain and simple. In addition, consent must be withdrawable at any time, and withdrawing it must be as easy as giving it (which, by the way, includes Sign up → Delete profile);

- “The right to be forgotten,” meaning that people have the right to have their data deleted;

- The right for people to get access to their personal data in any organization, alongside information about how this data is processed;

- Data portability, meaning that people have the right to get hold of their data in one company and transfer it to another company;

- Heavy fines for non-compliance with GDPR.
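To make a few of these requirements concrete for designers and developers, here is a minimal sketch of how explicit consent, easy withdrawal, data access and deletion might look in code. The names `ConsentRecord`, `Profile` and `forget` are hypothetical and invented for this illustration; this is a thinking aid under simplified assumptions, not legal advice or a recipe for compliance.

```python
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ConsentRecord:
    """One explicit consent for one purpose (hypothetical model)."""
    purpose: str                       # plain-language reason shown to the person
    granted_at: datetime
    withdrawn_at: Optional[datetime] = None

    @property
    def active(self) -> bool:
        return self.withdrawn_at is None


@dataclass
class Profile:
    email: str
    consents: dict = field(default_factory=dict)   # purpose -> ConsentRecord

    def give_consent(self, purpose: str) -> None:
        # Opt-in only: nothing is recorded until the person acts.
        self.consents[purpose] = ConsentRecord(purpose, datetime.now(timezone.utc))

    def withdraw_consent(self, purpose: str) -> None:
        # Withdrawing takes one call, just like giving consent did.
        record = self.consents.get(purpose)
        if record is not None and record.active:
            record.withdrawn_at = datetime.now(timezone.utc)

    def may_process(self, purpose: str) -> bool:
        record = self.consents.get(purpose)
        return record is not None and record.active

    def export(self) -> str:
        # Access and portability: hand over the data in a common format.
        return json.dumps({
            "email": self.email,
            "consents": [
                {
                    "purpose": r.purpose,
                    "granted_at": r.granted_at.isoformat(),
                    "withdrawn_at": r.withdrawn_at.isoformat() if r.withdrawn_at else None,
                }
                for r in self.consents.values()
            ],
        })


def forget(profiles: dict, email: str) -> bool:
    """Right to be forgotten: delete the whole profile. True if one existed."""
    return profiles.pop(email, None) is not None
```

The design choice worth noticing is symmetry: giving and withdrawing consent are equally small operations, and processing is only allowed while an active consent exists for that specific purpose.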

All in all, May 25, 2018, is a pretty good day for the people, and a sign of a much different future for all the unethically designed organizations out there.

But let’s not kid ourselves and think that change will happen overnight or across all organizations in a heartbeat. A deeper change is needed in order to make that happen over time.

Change Happens Through Change Of Meaning

GDPR is not likely to solve all of our problems. Believing that would be naive. That’s why it will continue to be up to the people within company walls to make a difference. The good news is that making a change is possible even if you’re not part of the management team who decides on the business model.

This type of change towards a more ethical design approach will not happen overnight. Rather, it is possible to make changes incrementally and foster long-term, organizational change through what Don Norman and Roberto Verganti call Meaning-driven Innovation (read more in their article about “Incremental and Radical Innovation”).

Meaning-driven innovation is a result of people starting to articulate new thoughts that create new dynamics, which ultimately lead to radically new meanings.

Don Norman and Roberto Verganti say that radical innovation only happens when one of the following things occurs:

- A new enabling technology is available in a reliable, economical form (it took 20 years for touch interfaces to exit the labs and enter the phone and tablet market);

- The meaning of something changes in society, which is also referred to as meaning-driven innovation.

I think that the increasing awareness, focus, and concern about our privacy in respect to data-driven businesses will, in the not so distant future, spark radical innovation and change in society. Ironically, the companies that founded surveillance capitalism have also sparked a change in how we perceive our right to privacy, and we are already starting to see companies and organizations innovate and foster privacy-driven solutions.

The meaning of companies that track and surveil us is already changing. We used to not think very hard about it, and maybe even found it convenient, when ads served on Facebook were based on our browsing history. But with the escalation of surveillance capitalism, I will argue that we are going through a meaning change, as a growing number of people find it not only uncomfortable but outright unacceptable to be spied on in the name of business.

DuckDuckGo, a search engine that doesn’t track, had an average of 16 million search queries per day in 2017, a sign that privacy is an increasing concern among people when using the web. Also, we are seeing apps devoted to privacy reaching markets, such as Signal, a secure phone and messenger app designed to protect privacy. Furthermore, it is highly likely that GDPR will spark further meaning change related to privacy.

Transition To A Human-Centered Design Approach

Human-Centered Design (HCD) is a philosophy developed by Don Norman (among others). According to the User Experience Professionals Association (UXPA), HCD is defined by “the active involvement of users and a clear understanding of user and task requirements”.

Don Norman and Roberto Verganti conclude in their substantial study that HCD is only suitable for incremental innovation — step by step improvements — because new ideas are not discovered while constantly looking at the existing state of things as it is done through user research.

While this sounds reasonable, I believe that HCD can prove to be the starting point for meaning-driven innovation, ultimately leading to a broad meaning change about what people will and won’t accept from unethical organizations. The reason I believe this is that HCD fosters a deeper sense of empathy than any other experience design method.

Human-Centered Design is a framework as well as a mindset. At its core, working “human-centered” means involving the people you serve early and continuously in the process, i.e. using research to establish the needs of these people, understanding what problems they have, and how your product can help solve these problems.

It falls within the natural sphere of experience designers to work human-centered, but what do you do if your job is in design and development, and you are constantly occupied by sprint reviews and daily tasks?

While working with a remote development team, I learned that the developers didn’t have any contact with the people who used the product. This often led to heated discussions where statements like “I think…”, “From a technical perspective…”, and “I feel…” were the main arguments.

The biggest problem with basing decisions on what you think and feel, or what is easiest from a technical perspective, is that it doesn’t involve the people you are serving. The people who your product or service is put in the world to solve problems for. That’s where HCD comes in.

UX designers and researchers typically conduct research, document the insights and bring them forward in a refined state to the design and product teams in the shape of personas, user needs descriptions, user flows, journeys, and so on. And that’s all well and good. The problem is, however, that the distance between the organisation and the people you serve remains large, because no one except the UX designers has talked to them or seen them use the product. So the rest of the team keeps going back to “I feel…”, “I think…” and “From a technical perspective…”

To help establish empathy towards the people you serve, there are a couple of very impactful things designers and developers — and the rest of the organization — can do (and can ask for from the UX team).

Involve all team members in watching videos from user testing sessions.

Actually going through the pains and delights of the people who use your products (or prototypes, depending on what you’re testing) is worth every second. It cannot be stressed enough how important it is to watch other people interact with and comment on the stuff you’re building (and no, your team doesn't count as “people” here!).

If this is not part of the routine in your company, ask for it to be included. Certainly, the vast majority of UX designers would be thrilled to organize and facilitate such sessions. You are guaranteed to go through pain, agony, frustration, happiness and get multiple eye openers, and it will all serve as the stepping stone towards growing a human-centered mindset.

Ask for actual, living portraits of the people you serve.

This includes photos and video from contextual studies, stories from their daily life and stories about them. Getting a deeper sense of the people on the other side of the product you develop creates instant empathy and makes it a lot harder to design things that knowingly are bad for them.

Insist on continuous testing.

It cannot be emphasized enough how crucial testing is in HCD. This includes early proof of concept tests, prototype tests, and usability tests. A side bonus of early and continuous involvement from the people who are meant to use the product is that it saves money in the long run. The earlier you realize a bad call or error, the cheaper it is to fix.

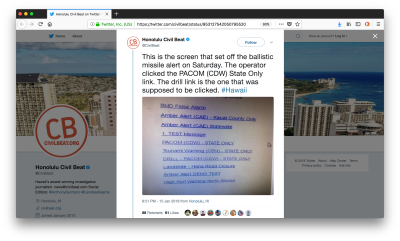

A lot has been said about the nuclear alarm that was triggered by mistake in Hawaii on January 13. However, it’s pretty safe to say that early and continuous testing would have helped prevent it.

Always ask “why?”

To start a change of meaning in an organization or community, the first important step is to start asking “why.” Ask why something is being done unethically; ask why you are told to make a black hat feature; question the current state of things.

Ask on what grounds design decisions are being made. If it’s because of what the CEO or someone else thinks, and it has no root in insights from the people you are serving, ask for that validation. Meaning change grows through small steps.

Ethical Design Best Practices

Alongside establishing a human-centered design tradition in the organization, it is also important to make use of the best practices of ethical design. People who do so are part of taking the lead and showing the rest of the organization how things can be done in a more ethical way, all of which will add to the incremental meaning change. Just as dark patterns fall under unethical design, we have White Hat design patterns that can be utilized to ensure ethical design, some of which you can learn about in the following.

Use Data To Improve The Human Experience

Despite numerous companies using data for unethical purposes, such as increasing consumption and traffic, data can, in fact, be used to actually improve the human experience.

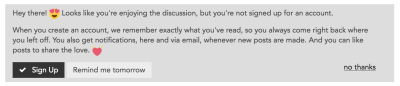

This is the case on the ind.ie forum where setting up an account is suggested as a way to customize your experience by remembering what you’ve read.

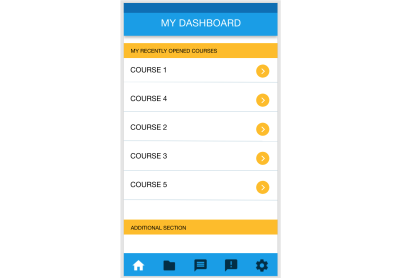

In a current project, I am involved in making an app that gives students easier access to their Learning Management System. We sort each student’s courses by “last visited” because we know through research that students most often revisit a small number of pages related to the courses they are currently enrolled in. This customization is not designed to change behavior or nudge them into using parts of the app they didn’t intend to. Instead, it is designed to make their experience faster and more efficient.
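As a rough illustration of how little data such a customization needs, here is a minimal sketch of sorting by “last visited”. The `Course` type and `sort_by_last_visited` function are hypothetical names made up for this example and are not taken from the actual app; the only stored data is a timestamp per course.

```python
from datetime import datetime
from typing import List, NamedTuple, Optional


class Course(NamedTuple):
    title: str
    last_visited: Optional[datetime]   # None if the student never opened it


def sort_by_last_visited(courses: List[Course]) -> List[Course]:
    """Most recently visited first; never-visited courses keep their order at the end."""
    visited = [c for c in courses if c.last_visited is not None]
    unvisited = [c for c in courses if c.last_visited is None]
    visited.sort(key=lambda c: c.last_visited, reverse=True)
    return visited + unvisited


courses = [
    Course("Statistics", datetime(2018, 3, 1)),
    Course("Ethics", None),
    Course("Design", datetime(2018, 3, 5)),
]
ordered = [c.title for c in sort_by_last_visited(courses)]
```

Nothing here profiles the student or predicts anything about them. The sort only reflects what they themselves did most recently.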

Another positive example is the American pharmacy Walgreens, which sends out reminders when it’s time to refill on things like vitamins. This is an extremely helpful feature that solves a problem a lot of us have.

Data can help inform research initiatives to understand how you’re not tackling the problems people have when interacting with your product.

Skyscanner, a travel search engine, noticed through their data that their newly launched design didn’t go down well with the people who used their service to fly out of Amsterdam. That data helped inform a research initiative that ultimately led to a customized solution for people flying to and from Amsterdam that broke down the barriers the new design had initially built (here’s the background story).

Advertising Without Tracking

Advertising is not necessarily unethical. Advertising based on granular data is. It is careless (if not stupid) to rely on a single platform for the majority of a brand’s marketing efforts, especially considering that said platform owns and controls the data the brand uses as the foundation for its ad targeting.

Facebook, Instagram, and Google care just about as little about their advertisers as they do about the people they see as “users,” i.e. they can make any change they want to, disregarding any consequences it might have for people or businesses using their platforms. For example, there was a time when Facebook started blocking fake accounts en masse, which hurt numerous companies whose social media admins had set up fake accounts to administer business pages because they (understandably) didn’t want to use their private accounts for this purpose. This is standard procedure from Facebook, which only allows one profile per person (likely because allowing several would contaminate their data tracking).

The platform is always the weakest link in a marketing strategy because it is a third party beyond the control of companies. Thinking back to the 90’s when I worked in marketing at a regional newspaper, granular data tracking was not an option. When a store had placed an ad in the paper about an event, they would simply monitor how many people showed up, and compare that to their expectations to determine the success rate.

While “the good old days” were certainly not good in all aspects, the thought of not basing advertising on granular user data tracking is appealing. John Gruber’s blog, Daring Fireball, is an example of a site that doesn’t allow ad tracking. Instead, John Gruber encourages advertisers to add a custom link to their ads, enabling them to monitor the click rate on their end.

As John Gruber rightfully states:

“If you pay (say) Facebook for an ad, why in the world would you, the advertiser, trust Facebook’s numbers for how the ad performed?”


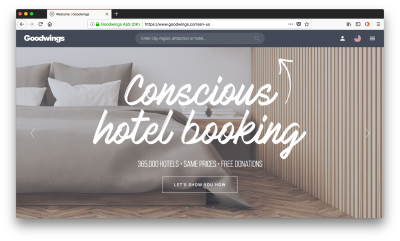
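The custom-link approach can be sketched in a few lines. This is a hedged illustration, not a description of how Daring Fireball or any particular advertiser does it: the `ref` parameter name, the example URL and the functions `tag_link` and `count_clicks` are assumptions made up for this example. The advertiser tags the landing page URL per ad placement and then counts visits in its own server log, so no third party is involved and no individual is tracked.

```python
from collections import Counter
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def tag_link(landing_url: str, campaign: str) -> str:
    """Return landing_url with a `ref` query parameter naming the ad placement."""
    parts = urlsplit(landing_url)
    # Keep existing parameters, but replace any earlier `ref` value.
    query = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True) if k != "ref"]
    query.append(("ref", campaign))
    return urlunsplit(parts._replace(query=urlencode(query)))


def count_clicks(requested_urls) -> Counter:
    """Count visits per campaign from the advertiser's own log of requested URLs."""
    clicks = Counter()
    for url in requested_urls:
        for key, value in parse_qsl(urlsplit(url).query):
            if key == "ref":
                clicks[value] += 1
    return clicks


link = tag_link("https://example.com/product?color=blue", "spring-sale-blog")
log = [link, link, "https://example.com/product"]
clicks = count_clicks(log)
```

The result is an aggregate count per placement, which is roughly the digital equivalent of counting how many people showed up at the store after the newspaper ad ran.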

Another great example worth highlighting is Goodwings, a hotel booking site that donates half of their commission to charity. They can do so because of a close collaboration with a large number of NGOs, which means they spend very little on traditional marketing.

And if you think Google Analytics is your only option for collecting meaningful data, think again: Matomo is an open source tool that can be installed directly on your own server. It guarantees that data is not shared with advertising companies such as Google.

Always, Always Prioritize Usability

Surveillance capitalism and data tracking are heavily talked about problems related to ethical design these days. But we must not forget the importance of complying with the best practices of usability. Without it, design is unethical, as a lack of usability almost always entails the use of dark patterns. A good place to go to read more about core usability is Nielsen Norman Group.

Back in the early days, Jakob Nielsen defined 5 core components of usability:

- Learnability,

- Efficiency,

- Memorability,

- Errors, and

- Satisfaction.

To ensure a usable product, it’s crucial that these five components are front and center in the design and development process.

Don’t Ask For More Than You Need

As with many other aspects in life, asking for more than you need results in exploitation. It’s common for e-commerce sites to ask for tons of information when people sign up for an account or buy a product. But if someone is buying a digital product (such as a book), there really is no need to ask for anything other than their email address.

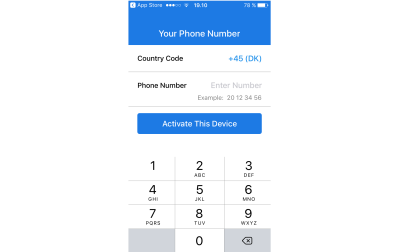

Signal is a great example. Private at its core, it doesn’t ask for anything more than the information that is absolutely necessary for people to start using the app right away.
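One way to hold yourself to this principle in code is to decide up front which fields a purchase actually requires and to drop everything else before it is stored. The sketch below assumes a hypothetical `REQUIRED_FIELDS` table and `minimize` function; the field names are illustrative only and should come from your own research into what is truly needed.

```python
# Hypothetical allow-list: which fields each kind of purchase truly needs.
REQUIRED_FIELDS = {
    "digital": {"email"},
    "physical": {"email", "name", "shipping_address"},
}


def minimize(form_data: dict, product_type: str) -> dict:
    """Keep only the fields needed for this product type; reject incomplete forms."""
    allowed = REQUIRED_FIELDS[product_type]
    missing = allowed - set(form_data)
    if missing:
        raise ValueError(f"missing required fields: {sorted(missing)}")
    # Anything not on the allow-list is discarded instead of stored.
    return {key: value for key, value in form_data.items() if key in allowed}


submitted = {"email": "ada@example.com", "phone": "555-0100", "birthday": "1990-01-01"}
stored = minimize(submitted, "digital")
```

Better still is not to put the extra fields in the form at all. The allow-list is a backstop that keeps data you never needed from slipping into storage.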

Be Transparent

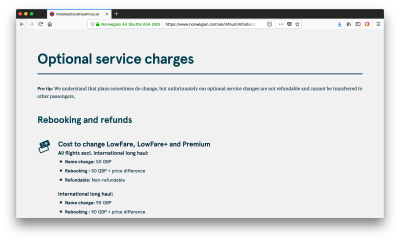

Norwegian.com has perhaps one of the best airfare booking systems in the world. Not only is it convenient to use, but they also offer full transparency in relation to their optional service fees, something that is often hidden away. Anyone who lives outside of a country with an Amazon warehouse knows how hard it is to find the actual delivery price for their location.

Conclusion

The movement towards a more ethical future has begun. Change doesn’t happen radically short term unless it’s built into the core of the business model. But that doesn’t mean we cannot change the current state for the better. We can do so through incremental change; one step at a time. By working human-centered, by asking why, and by using best practices for ethical design. That’s our obligation as the ones who build products so deeply ingrained in people’s lives. What we do changes and shapes lives for better or for worse. I choose better.

Learn More

There are a lot of valuable resources available for anyone interested in ethical design. Here are a few to get you started:

- IDEO’s Human-Centered Design Kit is extremely helpful to understand HCD and offers a wide range of methods on how to work human-centered.

- Simply Secure is an organization that supports and educates practitioners in ethical design processes. They offer a thorough knowledge base for people interested in building trustworthy technology.

- White Hat UX — The Next Generation in User Experience is a book that offers lots of practical advice on how to design experiences that are transparent, honest and ethical. Written by Trine Falbe, Martin Frederiksen, and Kim Andersen.

- Cracked Labs is an independent research institute and a creative laboratory based in Vienna, Austria. It investigates the socio-cultural impacts of information technology and develops social innovations in the field of digital culture. They offer in-depth reports about most things related to privacy.
