Long Live The Test Pyramid

A dear colleague of mine, Jan Philip Pietrczyk, once commented on the developer’s responsibility for writing functional code:

“Our daily work [...] ends up in the hands of people who trust us not only to have done our best but also that it works.”

— Jan Philip Pietrczyk

His words have really stuck with me because they put our code in the context of the people who rely on it. In this fast-paced world, users trust that we write the best code possible and that our software “simply” works. Living up to this level of trust is a challenge, for sure, and that’s why testing is such a crucial part of any development stack. Testing is a process that evaluates the quality of our work, validating it against different scenarios to help identify problems before they become, well, problems.

The Test Pyramid is one testing strategy of many. While it’s perhaps been the predominant testing model for the better part of a decade since it was introduced in 2012, I don’t see it referenced these days as much as I used to. Is it still the “go-to” approach for testing? Plenty of other approaches have cropped up in the meantime, so is the Test Pyramid simply being overshadowed by more modern models that are a better fit for today’s development?

That’s what I want to explore.

The Point Of Testing Strategies

Building trust with users requires a robust testing strategy to ensure the code we write makes the product function how they expect it to. Where shall we start with writing a good test? How many do we need? Many people have grappled with these questions. But it was a brief comment that Kent C. Dodds made that gave me the “a-ha!” moment I needed:

“The biggest challenge is knowing what to test and how to test it in a way that gives true confidence rather than the false confidence of testing implementation details.”

— Kent C. Dodds

That’s the starting point! Determining the goal of testing is the most crucial task of a testing strategy. The internet is full of memes depicting bad decisions, many resulting from simply not knowing the purpose of a particular test and how many we need to assert confidence. When it comes to testing, there is a “right ratio” to ensure that code is appropriately tested and that it functions as it should.

2 unit tests. 0 integration tests. pic.twitter.com/K2MZKwr8JT

— DEV Community (@ThePracticalDev) August 2, 2017

The problem is that many developers only focus on one type of testing — often unit test coverage — rather than having a strategy for how various units work together. For example, when testing a sink, we may have coverage for testing the faucet and the drain separately, but are they working together? If the drain clogs, but the faucet continues to pour water, things aren’t exactly working, even if unit tests say the faucet is.
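To make the sink analogy concrete, here is a minimal sketch in Python. The `Faucet`, `Drain`, and `Sink` classes are hypothetical names I made up for illustration, not part of any real library. The two unit tests pass whether or not the drain is clogged; only the tests that run the parts together reveal the overflow.

```python
class Faucet:
    """Pours a fixed amount of water on every tick."""

    def __init__(self, litres_per_tick: float) -> None:
        self.litres_per_tick = litres_per_tick

    def pour(self) -> float:
        return self.litres_per_tick


class Drain:
    """Removes water from the basin unless it is clogged."""

    def __init__(self, litres_per_tick: float, clogged: bool = False) -> None:
        self.litres_per_tick = litres_per_tick
        self.clogged = clogged

    def remove(self, available: float) -> float:
        if self.clogged:
            return 0.0
        return min(available, self.litres_per_tick)


class Sink:
    """Combines a faucet and a drain around a basin of limited capacity."""

    def __init__(self, faucet: Faucet, drain: Drain, capacity: float) -> None:
        self.faucet = faucet
        self.drain = drain
        self.capacity = capacity
        self.level = 0.0

    def tick(self) -> None:
        self.level += self.faucet.pour()
        self.level -= self.drain.remove(self.level)

    @property
    def overflowing(self) -> bool:
        return self.level > self.capacity


# Unit tests: each part is checked in isolation.
def test_faucet_pours():
    assert Faucet(2.0).pour() == 2.0


def test_drain_removes_water():
    assert Drain(3.0).remove(5.0) == 3.0


# Integration tests: the parts are checked while working together.
def test_sink_stays_level_with_clear_drain():
    sink = Sink(Faucet(2.0), Drain(3.0), capacity=10.0)
    for _ in range(20):
        sink.tick()
    assert not sink.overflowing


def test_sink_overflows_when_drain_clogs():
    sink = Sink(Faucet(2.0), Drain(3.0, clogged=True), capacity=10.0)
    for _ in range(20):
        sink.tick()
    assert sink.overflowing


for test in (
    test_faucet_pours,
    test_drain_removes_water,
    test_sink_stays_level_with_clear_drain,
    test_sink_overflows_when_drain_clogs,
):
    test()
    print(f"{test.__name__}: ok")
```

A suite with only the first two tests would report green even for a sink that floods the kitchen, which is exactly the kind of false confidence the quote warns about.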

Approaches for testing are often described in terms of shapes, and we’ve already seen one with the pyramid model. In this article, I would like to share some of the shapes I have observed, how they have played out in real-world scenarios, and, in conclusion, which testing strategy fits my personal criteria for good test coverage in today’s development practices.

Flashback To The Basics

Before that, let’s revisit some common definitions of different test types to refresh our memories:

- Manual tests: This is testing done by actual people. That means a test will ask real users to click around an app by following scripted use cases, as well as unscripted attempts to “break” the app in unforeseen scenarios. This is often done with live, in-person, or remote interviews with users observed by the product team.

- Unit tests: This type of test is where the app is broken down into small, isolated, and testable parts (or “units”), providing coverage by individually and independently testing each unit for proper operation.

- Integration tests: These tests focus on the interaction between components or systems. They exercise units together to check that they work well as an integrated whole.

- End-to-end (E2E) tests: The computer simulates actual user interactions in this type of test. Think of E2E as a way of validating user stories: can the user complete a specific task that requires a set of steps, and is the outcome what’s expected? That’s testing one end of the user’s experience to the other, ensuring that inputs produce proper outputs.

Now, how should those types of testing interact? The Test Pyramid is the go-to metaphor we’ve traditionally relied on to bring these various types of testing together into a complete testing suite for any application.

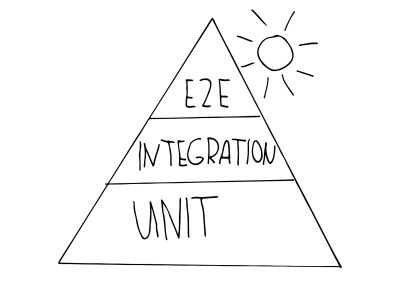

All Hail The Mighty Test Pyramid

The Test Pyramid, first introduced by Mike Cohn in his book Succeeding with Agile and developed further in Ham Vocke’s “The Practical Test Pyramid” post on Martin Fowler’s site, prioritizes tests based on their performance and cost. It recommends writing tests with different levels of granularity, with fewer high-level tests and more unit tests that are fast, cheap, and reliable. The recommended test order is from quick and affordable to slow and expensive, starting with many unit tests at the bottom, followed by service (i.e., integration) tests in the middle. At the top are fewer, but more specific, UI tests, including end-to-end tests.

There’s a growing sentiment in the testing community that the Test Pyramid oversimplifies how tests ought to be structured. Martin Fowler addressed this in a more recent blog post nearly ten years after posting about the pyramid shape. My team has even questioned whether the model brings our work closer to the end user or further away. While higher levels of the pyramid increase confidence in individual tests and offer better value, the model seems less mindful of the bigger picture of how everything works together. The testing pyramid felt like it was falling out of step with the times, at least for us.

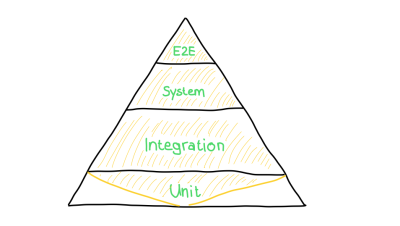

From Pyramids To Diamonds

One point my team discussed internally was the pyramid’s over-emphasis on unit testing. The pyramid is an excellent shape to describe what a unit test is and what scope it covers. But if you ask four people what a unit test is, you will likely get four different answers. Perhaps the shape needs a little altering to clear things up.

The biggest clarification my team needed was where and when unit testing stops. The pyramid shape suggests that unit tests take up the majority of the test process, and that felt off to us. Integration tests are what pull those together, after all.

So, another way to view the pyramid shape of a testing strategy is to let it evolve into a diamond shape.

Integration testing is sometimes called the “forgotten layer” of the testing pyramid because it covers cases that can be too complex for unit testing. But it gets more focus in the Testing Diamond, where it is often split into two specific layers:

- Integration Test Layer: This layer is pretty much the same as what we see in the Test Pyramid, but it is reserved for tests that are considered “too big to be a unit test,” something in between the Unit and Integration Test layers. A test on a specific component would be an ideal sort of thing for this layer.

- System Integration Test Layer: This layer is more about “real” integration tests, like data received from an API.

So, the diamond shape implies a process where unit tests are done immediately after integration testing is complete, but with less emphasis on those individual tests. This way, the integration layer gets the large billing it deserves while the emphasis on unit tests tapers off.

Where’s Manual Testing?

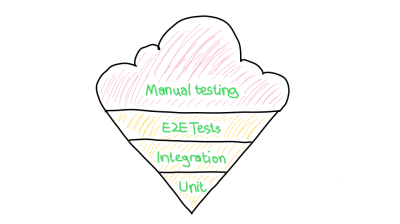

Whether a testing strategy is called a “pyramid” or a “diamond,” it is still missing the critical place of manual testing in the process. Automated testing is valuable, to be sure, but not to the extent that it makes manual testing practices obsolete.

I believe automated and manual tests work hand in hand. Automated testing should eliminate routine and common tasks, freeing testers to concentrate on the crucial areas that require more human attention. Rather than replace manual testing, automation should complement it.

What does that mean for our diamond shape… or the pyramid, for that matter? Manual testing is nowhere in the layers but should be. Automated tests efficiently detect bugs, but manual testing is still necessary for a more comprehensive approach that provides full coverage. That said, it’s still true that an ideal testing strategy will put a majority of the emphasis on automated tests.

That means the testing strategy looks more like an ice cream cone than either a pyramid or a diamond.

In fact, this is a real premise called the “Ice Cream Cone” approach. Although this approach takes longer to implement, it results in a higher confidence level and more bugs detected. Saeed Gatson provides a succinct description of it in a post that dates back to 2015.

But does an ice cream cone shape actually go far enough to describe the full nature of testing? Gleb Bahmutov has taken this concept to the extreme with what he calls the “Testing Crab” model. This approach involves screenshot comparisons, which a human then verifies for differences. Bahmutov sees visual and functional testing as “the body” of the crab, with all other types of testing serving as “the limbs.” There are indeed tools that provide before-and-after snapshots during a test that, when layered on top of one another, can highlight visual regressions.
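The idea of layering two snapshots to spot differences can be sketched in a few lines of Python. This is a toy illustration of the principle, assuming images are plain nested lists of RGB tuples; it is not how Bahmutov’s tooling or any particular visual testing product works, and `diff_pixels` is a hypothetical helper name.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (red, green, blue)
Image = List[List[Pixel]]     # rows of pixels


def diff_pixels(before: Image, after: Image, tolerance: int = 0) -> List[Tuple[int, int]]:
    """Return the (row, column) positions where two snapshots differ.

    A pixel counts as changed when any colour channel differs by more
    than `tolerance`.
    """
    same_shape = len(before) == len(after) and all(
        len(row_a) == len(row_b) for row_a, row_b in zip(before, after)
    )
    if not same_shape:
        raise ValueError("snapshots must have the same dimensions")

    changed = []
    for y, (row_before, row_after) in enumerate(zip(before, after)):
        for x, (old, new) in enumerate(zip(row_before, row_after)):
            if any(abs(a - b) > tolerance for a, b in zip(old, new)):
                changed.append((y, x))
    return changed


WHITE = (255, 255, 255)
BLUE = (0, 0, 255)
RED = (255, 0, 0)

# A 2x3 "screenshot" before and after a change that recolours one pixel.
baseline = [[WHITE, WHITE, WHITE], [WHITE, BLUE, WHITE]]
candidate = [[WHITE, WHITE, WHITE], [WHITE, RED, WHITE]]

print(diff_pixels(baseline, candidate))  # [(1, 1)]
```

The list of changed positions is what a person would then review: the tool only finds the difference, and a human decides whether it is a regression or an intended change.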

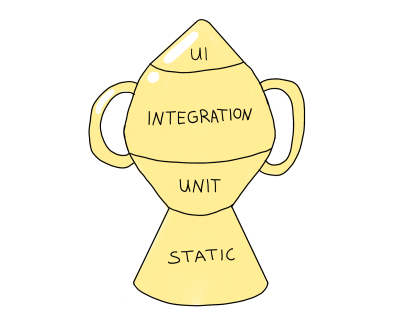

The Testing Trophy

All testing approaches are costly, and the Test Pyramid got that point right. It’s just that the shape itself may not be realistic or effective at considering the full nature of testing and the emphasis that each layer of tests receives. So, what we need to do is find a compromise between all of these approaches that accurately depicts the various layers of testing and how much emphasis each one deserves.

I like how simply Guillermo Rauch summed that up back in 2016:

Write tests. Not too many. Mostly integration.

— Guillermo Rauch (@rauchg) December 10, 2016

Let’s break that down a bit further.

- Write tests: Not only because it builds trust but also because it saves time in maintenance.

- Not too many: 100% coverage sounds nice, but it is not always good. If every single detail of an app is covered by tests, that means at least some of those tests are not critical to the end-user experience, and they are running purely for the sake of running, adding more overhead to maintain them.

- Mostly integration: Here is the emphasis on integration tests. They have the most business value because they offer a high level of confidence while maintaining a reasonable execution time.

You might recognize the following idea if you’ve spent any amount of time following the work of Kent C. Dodds. His “Testing Trophy” approach elevates integration testing to a higher priority level than the traditional testing pyramid, which is perfectly aligned with Guillermo Rauch’s assertions.

Kent discusses and explains the important role that comprehensive testing plays in a product’s success. He emphasizes the value of integration tests over testing individual units, as they provide a better understanding of the product’s core functionality and expected behaviors. He also suggests using fewer mocks in favor of more integration testing. The testing trophy is a metaphor depicting the granularity of tests in a slightly different way, distributing tests into the following types:

- Static analysis: These checks quickly identify typos and type errors as the code is written, without executing it.

- Unit tests: The trophy places less emphasis on them than the testing pyramid.

- Integration: The trophy places the most emphasis on them.

- User Interface (UI): These include E2E and visual tests and maintain a significant role in the trophy as they do in the pyramid.
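To illustrate the static analysis layer, here is a deliberately simplified sketch that finds a typo without ever running the code under inspection. It uses Python’s standard `ast` module; `undefined_names` is a hypothetical helper, and it ignores scopes and imports, so treat it as a toy rather than a stand-in for a real linter or type checker.

```python
import ast
import builtins
from typing import List


def undefined_names(source: str) -> List[str]:
    """Report names that are read somewhere but never defined in `source`.

    The source is only parsed into a syntax tree; it is never executed.
    """
    tree = ast.parse(source)
    defined = set(dir(builtins))
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.ClassDef)):
            defined.add(node.name)
        elif isinstance(node, ast.arg):
            defined.add(node.arg)
        elif isinstance(node, ast.Name) and isinstance(node.ctx, ast.Store):
            defined.add(node.id)

    used = {
        node.id
        for node in ast.walk(tree)
        if isinstance(node, ast.Name) and isinstance(node.ctx, ast.Load)
    }
    return sorted(used - defined)


SOURCE = '''
def total(prices):
    subtotal = sum(prices)
    return subtotl * 1.2
'''

print(undefined_names(SOURCE))  # ['subtotl']
```

A unit test would only catch this typo if it happened to call `total`. The static check flags it for every code path at once, which is why this layer sits at the base of the trophy.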

The “Testing Trophy” prioritizes the user perspective and boasts a favorable cost-benefit ratio. Is it our top pick? This test strategy is the most sensible, but there is a catch: integration and end-to-end tests have drawbacks, including longer runtimes and lower reliability. Unit tests still offer valuable benefits, and I still prefer to use them.

So, Is The Test Pyramid Dead?

The Test Pyramid is still a popular testing model for software development that helps ensure applications function correctly. However, like any model, it has its flaws. One of the biggest challenges is defining what constitutes a unit test.

My team implemented the modified diamond shape for our testing pipelines. And we’ve found that the pyramid is not entirely wrong, just incomplete. We still gain valuable insights from it, particularly in prioritizing the different types of tests we run.

It seems to me that development teams rarely stick to textbook test patterns, as Justin Searls has summed up nicely:

People love debating what percentage of which type of tests to write, but it's a distraction. Nearly zero teams write expressive tests that establish clear boundaries, run quickly & reliably, and only fail for useful reasons. Focus on that instead. https://t.co/xLceALKrWe

— Justin Searls (@searls) May 15, 2021

This is also true for my team’s experience, as dividing and defining tests is often difficult. And that’s not bad. Even Martin Fowler has emphasized the positive impact that different testing models have had on how we collectively view test coverage.

So, in no way do I believe the Test Pyramid is dead. I might even argue that knowing it is as essential now as ever. But the point is not to get too caught up in its shape or any other shapes. The most important thing to remember is that tests should run quickly and reliably and only fail when there’s a real problem. They should benefit the user rather than simply aiming for full coverage. You’ve already accomplished the most important thing by prioritizing these aspects in test design.

References

- “The Practical Test Pyramid,” Ham Vocke

- “On the Diverse And Fantastical Shapes of Testing,” Martin Fowler

- “The Testing Pyramid Should Look More Like A Crab,” Gleb Bahmutov

- “The Software Testing Ice Cream Cone,” Saeed Gatson

- “Write tests. Not too many. Mostly integration,” Kent C. Dodds

- “The Testing Trophy and Testing Classifications,” Kent C. Dodds

- “Static vs Unit vs Integration vs E2E Testing for Frontend Apps,” Kent C. Dodds